After 3G came 4G. After 4G comes 5G. After 5G comes 6G. The Shrivatsa of Technology.

This blog (over the next months there will be a series of blogs dedicated to 5G), “5G Economics – An Introduction”, has been a very long undertaking. It has been in the making since 2014, with me adding and then deleting as I changed my opinion and then changed it again. The NGMN Alliance “NGMN 5G White Paper” (hereafter the NGMN whitepaper) by Rachid El Hattachi & Javan Erfanian has been both a source of great visionary inspiration and a source of great worry when it comes to the economic viability of their vision. Some of the 5G ideas and aspirations are truly moonshot in nature and would make the Singularity University very proud.

So what is the 5G Vision?

“5G is an end-to-end ecosystem to enable a fully mobile and connected society. It empowers value creation towards customers and partners, through existing and emerging use cases, delivered with consistent experience, and enabled by sustainable business models.” (NGMN 5G Vision, NGMN 5G whitepaper).

The NGMN 5G vision is not limited to enhancing the radio/air interface (although that is the biggest cost & customer experience factor). 5G seeks to capture the complete end-2-end telecommunications system architecture and its performance specifications. This is an important difference from the past focus on primarily air interface improvements (e.g., 3G, HSPA, LTE, LTE-adv) and relatively modest evolutionary changes to the core network architecture (PS CN, EPC). In particular, the 5G vision provides architectural guidance on the structural separation of hardware and software. Furthermore, it utilizes the latest developments in software-defined telecommunications functionality enabled by cloudification and virtualization concepts known from modern state-of-the-art data centers. The NGMN 5G vision has most likely accepted more innovation risk than in the past, as well as being substantially more ambitious in both its specifications and the associated benefits.

“To boldly go where no man has gone before”

In the following, I encourage the reader to always keep in the back of their mind: “It is far easier to criticize somebody’s vision than it is to come up with the vision yourself”. I have tons of respect for the hard and intense development work that so far has been channeled into making the original 5G vision into a deployable technology that will contribute meaningfully to customer experience and the telecommunications industry.

For many of the concerns expressed in this blog and in other critiques, it is not that those concerns have not been considered in the NGMN whitepaper and 5G vision, but rather that those points are not getting much attention.

The cellular “singularity”, 5G that is, is supposed to hit us by 2020. In only four years. Americans and maybe others, taking names & definitions fairly lightly, might already have “5G” (à l’Américaine) a couple of years before the real thing is around.

The 5G Vision is a source of great inspiration. The 5G vision will require (and already is requiring) a lot of innovation effort and research & development to actually deliver on what are, for the most part, very challenging improvements over LTE.

My own main points of concern are in particular towards the following areas;

- Obsession with very high sustainable connection throughputs (> 1 Gbps).

- Extremely low latencies (1 ms and below).

- Too little (to no) focus on controlling latency variation (e.g., jitter), which might be of even greater importance than very low latency (<<10 ms) in its own right. I term this network predictability.

- Too strong focus on frequencies above 3 GHz in general and in particular the millimeter wave range of 30 GHz to 300 GHz.

- Backhaul & backbone transport transformation needed to support the 5G quantum leap in performance has been largely ignored.

- Relatively weak on fixed-mobile convergence.

It is not so much a question of whether the above points are important or not .. they are of course important. Rather it is a question of whether the prioritization and focus are right. A question of channeling more effort into what are (IMO) very important key 5G success factors, e.g., transport, convergence and designing 5G for the best user experience (and infinitely faster throughput per user is not the answer), ensuring that the technology is relevant for all customers and not only the ones who happen to be within coverage of the smallest cells.

Not surprisingly, the 5G vision is very mobile-system centric. There is too little attention to fixed-mobile convergence and the transport solutions (backhaul & backbone) that will enable the very high air-interface throughputs to be carried through the telecoms network. This is also not very surprising, as most mobile folks historically did not have to worry too much about transport, at least in mature advanced markets (i.e., the solutions needed were there without innovation and R&D efforts).

However, this is a problem. The required transport upgrade to support the 5G promises is likely to be very costly. The technology economics and affordability aspects of what is proposed are still very much work in progress. It is speculated that new business models and use cases will be enabled by 5G. So far little has been done to quantify those opportunities and to see whether they can justify some of the incremental cost that operators will surely incur as they deploy 5G.

CELLULAR CAPACITY … IT WORKS FOR 5G TOO!

To create more cellular capacity, measured in throughput, is easy, or can be made so with a bit of approximation. “All” we need is an amount of frequency bandwidth (in Hz), an air-interface technology that allows us to efficiently carry a certain amount of information in bits per second per unit bandwidth per capacity unit (i.e., what we call spectral efficiency), and a number of capacity units or multipliers, which for a cellular network is the radio cell. The most challenging parameter in this game is the spectral efficiency, as it is governed by the laws of physics with a hard limit (actually silly me … bandwidth and capacity units obviously are as well), while a much greater degree of freedom governs the amount of bandwidth and of course the number of cells.

Spectral efficiency is bounded by the so-called Shannon’s Law (for the studiously inclined I recommend his 1948 paper “A Mathematical Theory of Communication”). The consensus is that we are very close to the Shannon Limit in terms of spectral efficiency (in bits per second per Hz) of the cellular air-interface itself. Thus we are dealing with diminishing returns on what can be gained by further improving error correction, coding and single-input single-output (SISO) antenna technology.

I could throw more bandwidth at the capacity problem (i.e., the reason for the infatuation with the millimeter wave frequency range, as there really is a lot available up there at 30+ GHz) and of course build a lot more cell sites or capacity multipliers (i.e., definitely not very economical unless it results in a net positive margin). Of course I could (and most likely would if I had a lot of money) do both.

I could also try to be smart about the spectral efficiency and Shannon’s law. If I could reduce the need for, or even avoid, building more capacity multipliers or cell sites by increasing my antenna system complexity, it would likely result in very favorable economics. It turns out that multiple antennas act as a multiplier (simplistically put) for the spectral efficiency compared to a simple single (or legacy) antenna system. Thus, the way to improve the spectral efficiency inevitably leads us to substantially more complex antenna technologies (e.g., higher order MiMo, massive MiMo, etc…).
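The three capacity levers described above (bandwidth, spectral efficiency and number of cells) plus the antenna multiplier can be put into a small back-of-the-envelope sketch. This is an idealized illustration only: the function names, the 10 dB SNR, the 20 MHz and 200 MHz bandwidths and the assumption that spatial streams multiply capacity linearly are all my own illustrative choices, not figures from the NGMN whitepaper.

```python
import math


def shannon_spectral_efficiency(snr_db: float) -> float:
    """Shannon bound in bits/s/Hz for one antenna stream at a given SNR (dB)."""
    snr_linear = 10 ** (snr_db / 10)
    return math.log2(1 + snr_linear)


def network_capacity_bps(bandwidth_hz: float, snr_db: float,
                         spatial_streams: int, cells: int) -> float:
    """Idealized capacity: bandwidth x spectral efficiency x streams x cells.

    Assumes every spatial stream sees the same SNR and adds capacity
    linearly, which is an upper-bound simplification of real MiMo gains.
    """
    per_stream = shannon_spectral_efficiency(snr_db)
    return bandwidth_hz * per_stream * spatial_streams * cells


# Hypothetical example values (not taken from the text).
baseline = network_capacity_bps(20e6, 10.0, spatial_streams=1, cells=1)
more_antennas = network_capacity_bps(20e6, 10.0, spatial_streams=4, cells=1)
more_bandwidth = network_capacity_bps(200e6, 10.0, spatial_streams=1, cells=1)

for label, value in [("baseline", baseline),
                     ("4 spatial streams", more_antennas),
                     ("10x bandwidth", more_bandwidth)]:
    print(f"{label:>18}: {value / 1e6:8.1f} Mbps per cell")
```

In this simplified model the antenna lever and the bandwidth lever scale capacity without touching the cell count, which is the economically interesting part of the argument.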

Building new cell sites or capacity multipliers should always be the last resort, as it is most likely the least economical option available to boost capacity.

Thus we should be committing increasingly more bandwidth (i.e., 100s – 1000s of MHz and beyond) assuming it is available (i.e., if not, we are back to adding antenna complexity and more cell sites). The need for very large bandwidths, in comparison with what is deployed in today’s cellular systems, automatically forces the choices into high frequency ranges, i.e., >3 GHz and into the millimeter wave range above 30 GHz. The higher frequency band inevitably leads to limited coverage and a high to massive demand for small cell deployment.

Yes! It’s a catch 22 if there ever was one. The higher carrier frequency increases the likelihood of more available bandwidth. A higher carrier frequency also results in a reduced size of our advanced complex antenna system (which is good). Both boost capacity to no end. However, the coverage area where I have engineered the capacity boost shrinks approx. with the square of the carrier frequency.
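To get a feel for what “coverage area shrinks with the square of the carrier frequency” means for site counts, here is a minimal sketch. It assumes a free-space-like propagation model with an unchanged link budget, so that cell radius scales with 1/frequency; it ignores antenna gain, beamforming, clutter and indoor penetration, and the function name and example frequencies are mine.

```python
def relative_cell_count(f_new_hz: float, f_ref_hz: float) -> float:
    """Cells needed at f_new relative to f_ref to cover the same area.

    Assumption: cell radius ~ 1/frequency, hence cell area ~ 1/frequency^2
    and the number of cells for a fixed area ~ frequency^2.
    """
    return (f_new_hz / f_ref_hz) ** 2


# Hypothetical comparison against an 1800 MHz macro grid.
for f_ghz in (3.5, 28.0):
    factor = relative_cell_count(f_ghz * 1e9, 1.8e9)
    print(f"{f_ghz:5.1f} GHz vs 1.8 GHz: ~{factor:.0f}x the number of cells")
```

Real deployments will not follow this simple rule exactly, but the order of magnitude is what makes ubiquitous high-frequency coverage so hard to justify.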

Clearly, ubiquitous 5G coverage at those high frequencies (i.e., >3 GHz) would be a very silly endeavor (to put it nicely) and very uneconomical.

5G, as long as the main frequency deployed is in the high or very high frequency regime, would remain a niche technology. Irrelevant to a large proportion of customers and use cases.

5G needs to be macro cellular focused to become relevant for all customers and economically beneficial to most use cases.

THE CURIOUS CASE OF LATENCY.

The first time I heard about the 5G 1 ms latency target (communicated with a straight face and lots of passion), my reaction was to ROFL. Not a really mature reaction (mea culpa) and, agreed, many might have had the same reaction when J.F. Kennedy announced the goal of putting a man on the moon and safely back on Earth within 10 years. So my apologies for having had a good laugh (likely not the last to laugh though in this matter).

In Europe, the average LTE latency is around 41±9 milliseconds, including pinging an external (to the network) server, but this does not, for example, include the additional time it takes to load a web page or start a video stream. The (super) low latency (1 ms and below) poses other challenges, but these are at least relevant to the air-interface and a reasonable justification to work on a new air-interface (apart from studying channel models in the higher frequency regime). The best latency, internal to the mobile network itself, you can hope to get out of “normal” LTE as it is commercially deployed is slightly below 20 ms (without considering re-transmission). For pre-allocated LTE this can be further reduced towards 10 ms (without considering re-transmission, which adds at least 8 ms). In 1 ms light travels ca. 200 km (in optical fiber). To support use cases requiring 1 ms End-2-End latency, all transport & processing would have to be kept inside the operator’s network. Clearly, the physical transport path to the location where processing of the transported data would occur would need to be very short to guarantee 1 ms. The relative 5G latency improvement over LTE would need to be (much) better than 10 times (LTE pre-allocated) to 20 times (scheduled “normal” LTE), ignoring re-transmission (which would only make the challenge bigger).

An example. Say that the 5G standardization folks get the latency down to 0.5 ms (vs the ~10 – 20 ms today); the 5G processing node (i.e., Data Center) then cannot be more than 50 km away from the 5G radio cell (i.e., it takes light ca. 0.5 ms to travel 100 km in fiber, there and back). This latency (budget) challenge has led the Telco industry to talk about the need for so-called edge computing and the need for edge data centers to deliver the 5G promise of very low latencies. Remember, this runs against the past Telco trend of increasing centralization of computing & data processing resources. Moreover, it is bound to lead to incremental cost. Thus, show me the revenues.
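The latency budget arithmetic in the example above can be sketched in a few lines. The 200 km per millisecond fiber figure and the 1 ms and 0.5 ms values come from the text; the function name is mine, and the sketch assumes (simplistically) that whatever is left of the budget is spent purely on fiber propagation there and back, with no switching or queuing delay.

```python
FIBER_KM_PER_MS = 200.0  # light travels ca. 200 km per ms in optical fiber


def max_one_way_distance_km(e2e_budget_ms: float,
                            radio_and_processing_ms: float) -> float:
    """Maximum distance between radio cell and processing node.

    The remaining budget after radio/processing latency is assumed to be
    used only for fiber propagation, out and back (hence the division by 2).
    """
    transport_ms = e2e_budget_ms - radio_and_processing_ms
    if transport_ms <= 0:
        return 0.0
    return transport_ms * FIBER_KM_PER_MS / 2


print(max_one_way_distance_km(1.0, 0.5))   # the 1 ms case from the text
print(max_one_way_distance_km(10.0, 0.5))  # a 10 ms budget for comparison
```

Relaxing the End-2-End target from 1 ms to 10 ms moves the data center from “around the corner” to hundreds of kilometers away, which is why the choice of latency target drives the edge computing bill.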

There is no doubt that small, smaller and smallest 5G cells will be essential for providing the very lowest latencies, and the smallness comes for “free” given the very high frequencies planned for 5G. The radio environment of a small cell is closer to ideal than the harsh macro-cellular environment, which minimizes the likelihood of re-transmission events. And distances are shorter, which helps as well.

I believe that converged telecommunications operators are in a better position (particularly compared to mobile-only operations) to leverage existing fixed infrastructure for a 5G architecture relying on edge data centers to provide very low latencies. However, this will not come for free and without incremental costs.

How much faster is fast enough from a customer experience perspective? According to John Carmack, CTO of Oculus VR, “.. when absolute delays are below approximately 20 milliseconds they are generally imperceptible.”, particularly as it relates to 3D systems and the VR/AR user experience, which is a lot more dynamic than watching content load. Recent research specific to website response time indicates that anything below 100 ms will be perceived as instantaneous. At 1 second users will sense the delay, but the experience would still be perceived as seamless. If a web page takes more than 2 seconds to load, user satisfaction levels drop dramatically and a user would typically bounce. Please do note that most of this response or download time overhead has very little to do with connection throughput, and much more to do with a host of other design and configuration issues. Cranking up the bandwidth will not per se solve poor browsing performance.

End-2-End latencies in the order of 20 ms are very important for a solid high quality VR user experience. However, to meet this kind of performance figure the VR content needs to be within the confines of the operator’s own network boundaries.

End-2-End (E2E) latencies of less than 100 ms would in general be perceived as instantaneous for normal internet consumption (e.g., social media, browsing, …). However, this still implies that operators will have to focus on bringing the latencies internal to their networks far below the overall 100 ms target and, due to externalities, might try to get content inside their networks (and into their own data centers).

A 10-ms latency target, while much less moonshot, would be a far more economical target to strive for and might avoid substantial incremental cost of edge computing center deployments. It also resonates well with the 20 ms mentioned above, required for a great VR experience (leaving some computing and process overhead).

The 1-ms vision could be kept for use cases involving very short distances, a highly ideal radio environment and compute pretty much sitting on top of whatever needs this performance, e.g., industrial plants, logistics / warehousing, …

Finally, the targeted extreme 5G speeds will require very substantial bandwidths. Such large bandwidths are readily available in the high frequency ranges (i.e., >3 GHz). The high frequency domain makes a lot of 5G technology challenges easier to cope with. However, cell ranges will be (very) limited in comparison to macro cellular ones, e.g., Barclays Equity Research projects that 10x more cells will be required for 5G (10x!). 5G coverage will then not match that of the macro cellular (LTE) network. In which case 5G will remain niche. With a lot less relevance to consumers. Obviously, 5G will have to jump the speed divide (a very substantial divide) to the macro cellular network to become relevant to the mass market. Little thinking appears to be spent on this challenge currently.

THE VERY FINE ART OF DETECTING MYTH & BALONEY.

Carl Sagan, in his great article The Fine Art of Baloney Detection, states that one should “Try not to get overly attached to a hypothesis just because it’s yours.” Although Carl Sagan starts out discussing the nature of religious belief and the expectations of an afterlife, much of his “Baloney Detection Kit” applies equally well to science & technology. In particular to our expert expectations of consumers and their most likely demand. After all, isn’t Technology in some respects our new modern day religion?

Some might have the impression that the expectations towards 5G are the equivalent of a belief in an afterlife, or maybe more accurately a resurrection of the Telco business model to its past glory. It is almost like a cosmic event, where after entropy death, the big bang gives birth to new, and supposedly unique (& exclusive) to our Telco industry, revenue streams that will make all alright (again). There clearly is some hype involved in current expectations towards 5G, although the term still has to enter the Gartner hype cycle report (maybe 2017 will be the year?).

The cynic (mea culpa) might say that it is inevitable that there will be a 5G after 4G (that came after 3G (that came after 2G)). We also would expect 5G to be (a lot) better than 4G (that was better than 3G, etc..).

so …

Well … Better for who? … Better for Telcos? Better for Suppliers? Better revenues? Their Shareholders? Better for our Consumers? Better for our Society? Better for (engineering) job security? … Better for Everyone and Everything? Wow! Right? … What does better mean?

- Better speed … Yes! … Actually the 5G vision gives me insanely better speeds than LTE does today.

- Better latency … Internal to the operator’s own network Yes! … Not per default noticeable for most consumer use cases relying on the externalities of the internet.

- Better coverage … well if operators can afford to provide 100% 5G coverage then certainly Yes! Consumers would benefit even at a persistent 50 Mbps level.

- Better availability …I don’t really think that Network Availability is a problem for the general consumer where there is coverage (at least not in mature markets, Myanmar absolutely … but that’s an infrastructure problem rather than a cellular standard one!) … Whether 100% availability is noticeable or not will depend a lot on the starting point.

- Better (in the sense of more) revenues … Work in Progress!

- Better margins … Only if incremental 5G cost to incremental 5G revenue is positive.

- etc…

Recently William Webb published a book titled “The 5G Myth: And why consistent connectivity is a better future” (reminder: a myth is a belief or set of beliefs, often unproven or false, that have accrued around a person, phenomenon, or institution). William Webb argues;

- 5G vision is flawed and not the huge advance in global connectivity as advertised.

- The data rates promised by 5G will not be sufficiently valued by the users.

- The envisioned 5G capacity demand will not be needed.

- Most operators can simply not afford the cost required to realize 5G.

- Technology advances are insufficient to realize the 5G vision.

- Consistent connectivity is the more important aim of a 5G technology.

I recommend everyone to read William Webb’s well written and even better argued book. It is one of the first more official critiques of the 5G Vision. Some of the points certainly should make us pause and maybe even re-evaluate 5G priorities. If anything, it helps to sharpen 5G arguments.

Despite William Webb’s critique of 5G, one needs to realize that a powerful technology vision of what 5G could be, even if very moonshot, does leapfrog the innovation needed to take a given technology to a substantially higher level than what might otherwise be the case. If the 5G whitepaper by Rachid El Hattachi & Javan Erfanian had “just” been about better & consistent coverage, we would not have had the same technology progress, independent of whether the ultimate 5G end game is completely reachable or not. Moreover, to be fair to the NGMN whitepaper, it is not that the whitepaper does not consider consistent connectivity, it very much does. It is more a matter of where the main attention of the industry lies at this moment. That attention is not on consistent connectivity but much more on niche use cases (i.e., ultra high bandwidth at ultra low latencies).

Rest assured, over the next 10 to 15 years we will see whether William Webb will end up in the same category as other very smart, in-the-know people whose technology predictions were proven wrong (e.g., IBM Chairman Thomas Watson’s famous 1943 quote that “… there is a world market for maybe five computers.” and NO! despite claims to the contrary Bill Gates never said “640K of memory should be enough for anybody.”).

Another very worthy 5G analysis, also from 2016, is the Barclays Equity Research “5G – A new Dawn” (September 2016) paper. The Barclays 5G analysis concludes;

- Mobile operators will need 10x more sites over the next 5 to 10 years driven by 5G demand.

- There will be a strong demand for 5G high capacity service.

- The upfront cost for 5G will be very substantial.

- The cost of data capacity (i.e., Euro per GB) will fall by approx. a factor 13 between LTE and 5G (note: this is “a bit” of an economic problem when capacity is supposed to increase by a factor 50).

- Sub-scale Telcos, including mobile-only operations, may not be able to afford 5G (note: this point, if true, should make the industry very alert towards regulatory actions).

- Having a modernized super-scalable fixed broadband transport network is likely to be a 5G King Maker (note: It’s going to be great to be an incumbent again).

To the casual observer, it might appear that Barclays is in strong opposition to William Webb’s 5G view. However, maybe that is not completely so.

If it is true that only very few Telcos, primarily modernized incumbent fixed-mobile Telcos, can afford to build 5G networks, one might argue that the 5G Vision is “somewhat” flawed economically. The root cause of this assumed economic flaw (according to Barclays, although they do not point out that it is a flaw!) clearly is the very high 5G speeds assumed to be demanded by the user. This results in a massive increase in network densification and a need for radically modernized & re-engineered transport networks to cope with this kind of demand.

Barclays’ assessments are fairly consistent with the illustration shown below of the likely technology cost impact, showing the challenges a 5G deployment might have;

Some of the possible operational cost improvements in IT, Platforms and Core shown in the above illustration arise from the naturally evolving architectural simplifications and automation strategies expected to be in place by the time of the 5G launch. However, the expected huge increase in small cells is the root cause of most of the capital and operational cost pressures expected to arise with 5G. Depending on the original state of the telecommunications infrastructure (e.g., cloudification, virtualization,…), the degree of transport modernization (e.g., fiberization), and the business model (e.g., degree of digital transformation), the 5G economic impact can range from relatively modest (albeit momentarily painful) to brutal (i.e., little chance of financial return on investment), as discussed in the Barclays “5G – A new dawn” paper.

Furthermore, if the relative cost of delivering a 5G Byte is 13 – 14 times lower than that of an LTE Byte, and the 5G capacity demand is 50 times higher than for LTE, the economics don’t work out very well. So if I can produce a 5G Byte at 1/14th of the cost of an LTE Byte, but my 5G Byte demand is 50x higher than in LTE, I could (simplistically) end up with more than 3x more absolute cost for 5G. That’s really Ugly! Although if Barclays are correct in the factor 10 higher number of 5G sites, then a (relevant) cost increase of factor 3 doesn’t seem completely unrealistic. Of course Barclays could be wrong! Unfortunately, an assessment of the incremental revenue potential has yet to be provided. If the price for a 5G Byte could be in excess of a factor 3 of an LTE Byte … all would be cool!
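The simplistic cost arithmetic above is easy to reproduce. The factors 13 – 14 (unit cost reduction) and 50 (demand growth) are the Barclays figures quoted in the text; the function name is mine, and the sketch deliberately ignores any fixed cost, scale effect or revenue uplift.

```python
def relative_total_cost(demand_multiplier: float,
                        unit_cost_reduction: float) -> float:
    """Absolute 5G cost relative to LTE when demand grows by
    `demand_multiplier` while the cost per Byte falls by
    `unit_cost_reduction` (both expressed as plain factors)."""
    return demand_multiplier / unit_cost_reduction


for reduction in (13, 14):
    factor = relative_total_cost(50, reduction)
    print(f"Unit cost down {reduction}x, demand up 50x: "
          f"total cost ~{factor:.1f}x LTE")
```

Both cases land between 3.5x and 4x the LTE cost, consistent with the “more than 3x” stated above.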

If there is something to be worried about, I would worry much more about the Barclays 5G analysis than the challenges of William Webb (although certainly somehow intertwined).

What is the 5G market potential in terms of connections?

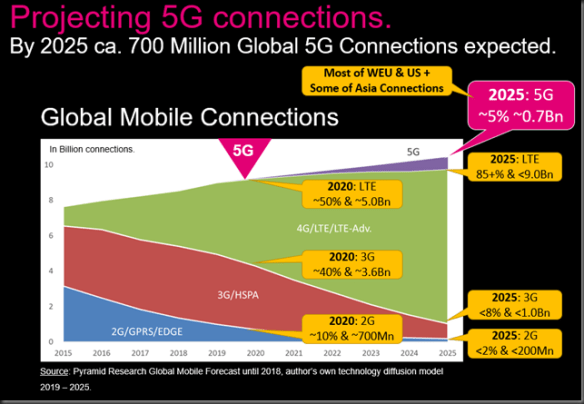

At this moment very few 5G market uptake forecasts have made it out in the open. However, taking the Strategy Analytics August 2016 5G forecast of ca. 690 million global 5G connections by year 2025, we can get an impression of what 5G uptake might look like;

Caution! The above global mobile connection forecast is likely to change many times as we approach commercial launch and get a much better impression of the 5G launch strategies of the various important players in the Telco Industry. In my own opinion, if 5G is launched primarily in the mm-wave bands around and above 30 GHz, I would not expect to see a very aggressive 5G uptake. Possibly a lot less than the above (with the danger of putting myself in the category of badly wrong forecasts of the future). If 5G were deployed as an overlay to existing macro-cellular networks … hmmm who knows! Maybe the above would be a very pessimistic view of 5G uptake?

THE 5G PROMISES (WHAT OTHERS MIGHT CALL A VISION).

Let’s start with the 5G technology vision as being presented by NGMN and GSMA.

The GSMA (Groupe Speciale Mobile Association) 2014 paper entitled ‘Understanding 5G: Perspective on future technology advancements in mobile’ has identified 8 main requirements;

1. 1 to 10 Gbps actual speed per connection at a max. of 10 millisecond E2E latency.

Note 1: This is foreseen in the NGMN whitepaper only to be supported in dense urban areas including indoor environments.

Note 2: Throughput figures are as experienced by the user in at least 95% of locations for 95% of the time.

Note 3: In 1 ms, light travels ca. 200 km in optical fiber.

2. A Minimum of 50 Mbps per connection everywhere.

Note 1: this should be consistent user experience outdoor as well as indoor across a given cell including at the cell edge.

Note 2: Another sub-target under this promise was ultra-low cost Networks where throughput might be as low as 10 Mbps.

3. 1,000 x bandwidth per unit area.

Note: notice the term per unit area & think mm-wave frequencies; very small cells, & 100s of MHz frequency bandwidth. This goal is not challenging in my opinion.

4. 1 millisecond E2E round trip delay (tactile internet).

Note: The “NGMN 5G White Paper” does have most 5G use cases at 10 ms, allowing some slack for air-interface latency and reasonably distanced transport to core and/or aggregation points.

5. Massive device scale with 10 – 100 x number of today’s connected devices.

Note: Actually, if one believes in the 1 Million Internet of Things connections per km2 target this should be aimed close to 1,000+ x rather than the 100 x for an urban cell site comparison.

6. Perception of 99.999% service availability.

Note: ca. 5 minutes of service unavailability per year. If counted on active usage hours this would be less than 2.5 minutes per year per customer or less than 1/2 second per day per customer.

7. Perception of 100% coverage.

Note: According to the European Commission’s report “Broadband Coverage in Europe 2015”, 86% of EU28 households had access to LTE overall. However, only 36% of EU28 rural households had access to LTE in 2015.

8. 90% energy reduction of current network-related energy consumption.

Note: Approx. 1% of a European Mobile Operator’s total Opex.

9. Up-to 10 years battery life for low-power Internet of Things 5G devices.
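As a quick sanity check of the availability note under requirement 6 above, the downtime implied by “five nines” can be computed directly. The 99.999% figure is from the list; the function name and the 10 active usage hours per day are purely my own illustrative assumptions (the text only says that counting active hours gives less than 2.5 minutes per year).

```python
MINUTES_PER_YEAR = 365 * 24 * 60


def downtime_minutes_per_year(availability: float,
                              active_fraction: float = 1.0) -> float:
    """Expected minutes of unavailability per year.

    `active_fraction` scales the result to the share of time a customer
    is actually using the service (1.0 means around the clock).
    """
    return (1.0 - availability) * MINUTES_PER_YEAR * active_fraction


full_year = downtime_minutes_per_year(0.99999)
# Hypothetical assumption: 10 active usage hours per day.
active_only = downtime_minutes_per_year(0.99999, active_fraction=10 / 24)

print(f"Around the clock:   {full_year:.2f} minutes per year")
print(f"Active hours only:  {active_only:.2f} minutes per year, "
      f"or {active_only * 60 / 365:.2f} seconds per day")
```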

The 5G whitepaper also discusses new business models and business opportunities for the Telco industry. However, there is little clarity on what would be the relevant 5G business targets. In other words, what would 5G as a technology bring, in additional Revenues, in Churn reduction, Capex & Opex (absolute) Efficiencies, etc…

More concrete and tangible economic requirements are badly needed in the 5G discussion. Without them, it is difficult to see how Technology can ensure that the 5G system that will be developed will also be relevant for the business challenges in 2020 and beyond.

Today an average European Mobile operator spends approx. 40 Euro in Total Cost of Ownership (TCO) per customer per annum on network technology (and slightly less on average per connection). This assumes a capital annualization period of 5 years and that about 15% of its Opex relates to Technology (excluding personnel cost).

The 40 Euro TCO per customer per annum today sustains an average LTE EU28 customer experience of 31±9 Mbps downlink speed @ 41±9 ms (i.e., based on the OpenSignal database with data as of 23 December 2016). Of course this also provides for 3G/HSPA network sustenance and what remains of the 2G network.

Thus, we might have a 5G TCO ceiling, at least without additional revenue. The maximum 5G technology cost for an average speed (in downlink) of 1 – 10 Gbps @ 10 ms should not be more than 40 Euro TCO per customer per annum (i.e., and preferably a lot less by the time we eventually launch 5G in 2020).
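To illustrate how demanding a flat TCO ceiling is, here is a small sketch of the implied reduction in technology cost per delivered Mbps. The 31 Mbps average LTE speed and the 1 – 10 Gbps targets are taken from the text; comparing an average experienced speed with a target speed is a simplification, and the function name is mine.

```python
def required_unit_cost_reduction(target_mbps: float,
                                 current_mbps: float = 31.0) -> float:
    """Factor by which TCO per Mbps must fall if total TCO per customer
    stays flat while the delivered speed goes from current to target."""
    return target_mbps / current_mbps


for target_gbps in (1, 10):
    factor = required_unit_cost_reduction(target_gbps * 1000)
    print(f"{target_gbps:>2} Gbps at today's TCO: "
          f"cost per Mbps must fall ~{factor:.0f}x")
```

Under these assumptions, staying within today’s 40 Euro per customer means the cost per Mbps has to drop by one to two orders of magnitude beyond the factor 13 – 14 per-Byte reduction quoted from Barclays.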

Thus, our mantra when developing the 5G system should be:

5G should not add additional absolute cost burden to the Telecom P&L.

This also raises the question of proposing some economic requirements to partner up with the technology goals.

5G ECONOMIC REQUIREMENTS (TO BE CONSIDERED).

- 5G should provide new revenue opportunities in excess of 20% of access based revenue (e.g., Europe mobile access based revenue streams by 2021 expected to be in the order of 160±20 Billion Euro; thus the 5G target for Europe should be to add an opportunity of ca. 30±5 Billion in new non-access based revenues).

- 5G should not add to Technology TCO while delivering up-to 10 Gbps @ 10 ms (with a floor level of 1 Gbps) in urban areas.

- 5G focus on delivering macro-cellular customer experience at minimum 50 Mbps @ maximum 10 ms.

- 5G should target 20% reduction of Technology TCO while delivering up-to 10 Gbps @ 10 ms (min. 1 Gbps).

- 5G should keep pursuing better spectral efficiency (i.e., Mbps/MHz/cell), and not only by means of antenna designs, e.g., n-order MiMo and Massive-MiMo, that are largely independent of the air-interface (i.e., work as well with LTE).

- Target at least 20% 5G device penetration within first 2 years of commercial launch (note: only after 20% penetration does the technology efficiency become noticeable).

In order not to increase the total technology TCO, we would at the very least need to avoid adding physical assets or infrastructure to the existing network infrastructure, unless such an addition provides a net removal of other physical assets and thus of the associated cost. Given the current high frequency focus, and the resulting demand for a huge number of small cells, this is going to be very challenging, but it would be less so by focusing more on macro cellular exploitation of 5G.

Thus, there needs to be a goal to also overlay 5G on our existing macro-cellular network, rather than primarily focusing on small, smaller and smallest cells. This is similar to what has been done for LTE and was much more of a challenge with UMTS (i.e., due to the optimum cellular grid mismatch between the 2G voice-based network and the more data-centric, higher frequency 3G network).

What is the cost reference that should be kept in mind?

As shown below, the pre-5G technology cost is largely driven by access cost related to the number of deployed sites in a given network and the backhaul transmission.

Adding more sites, macro-cellular or a high number of small cells, will increase Opex and add not only a higher momentary Capex demand, but also burden future cash requirements. Unless equivalent cost can be removed by the 5G addition.

Obviously, if adding additional physical assets leads to verifiable incremental margin, then accepting incremental technology cost might be perfectly okay (let’s avoid being radical financial controllers).

Though it’s always wise to remember;

Cost committed is a certainty, incremental revenue is not.

NAUGHTY … IMAGINE A 5G MACRO CELLULAR NETWORK (OHH JE!).

From the NGMN whitepaper, it is clear that 5G is supposed to be served everywhere (albeit at very different quality levels) and not only in dense urban areas. Given the economic constraints (considered very lightly in the NGMN whitepaper) it is obvious that 5G would be available across operators’ existing macro-cellular networks and thus also in the existing macro cellular spectrum regime. Not that this gets a lot of attention.

In the following, I am proposing a 5G macro-cellular overlay network providing a 1 Gbps persistent connection enabled by massive MiMo antenna systems. This thought experiment is somewhat at odds with the NGMN whitepaper, where their 50 Mbps promise might be more appropriate. Due to the relatively high frequency range in this example, massive MiMo might still be practical as a deployment option.

If you follow all the 5G news, in particular on 5G trials in the US and Europe, you could easily get the impression that mm-wave frequencies (e.g., 30 GHz up to 300 GHz) are the new black.

There is the notion that;

“Extremely high frequencies means extremely fast 5G speeds”

which is baloney! It is the extremely large bandwidth, readily available in the extremely high frequency bands, that make for extremely fast 5G (and LTE of course) speeds.

We can have GHz bandwidths instead of MHz (i.e., 1,000x) to play with! … How extremely cool is that? We can totally suck at fundamental spectral efficiency and still get extremely high throughputs for the consumers’ data consumption.

While this mm-wave frequency range is very cool, from an engineering perspective and for sure academically as well, it is also an extremely poor match for our existing macro-cellular infrastructure with its 700 MHz to 2.6 GHz working frequency range. Most mobile networks in Europe have been built on a 900 or 1800 MHz fundamental grid, with fill-in sites driven by UMTS 2100 MHz coverage and capacity requirements.

Being a bit of a party pooper, I asked whether it wouldn’t be cool (maybe not to the extreme … but still) to deploy 5G as an overlay on our existing (macro) cellular network? Would it not be economically more relevant to boost the customer experience across our macro-cellular networks, which actually serve our customers today? As opposed to augmenting the existing LTE network with ultra hot zones of extreme speeds and possibly also an extreme number of small cells.

If 5G would remain an above 3 GHz technology, it would be largely irrelevant to the mass market and most use cases.

A 5G MACRO CELLULAR THOUGHT EXAMPLE.

So let’s be (a bit) naughty and assume we can free up 20MHz @ 1800 MHz. After all, mobile operators tend to have a lot of this particular spectrum anyway. They might also re-purpose 3G/LTE 2.1 GHz spectrum (possibly easier than 1800 MHz pending overall LTE demand).

In the following, I am ignoring that whatever benefits I get out of deploying higher-order MiMo or massive MiMo (mMiMo) antenna systems, will work (almost) equally well for LTE as it will for 5G (all other things being equal).

Remember we are after

- A lot more speed. At least 1 Gbps sustainable user throughput (in the downlink).

- Ultra-responsiveness with latencies from 10 ms and down (E2E).

- No worse 5G coverage than with LTE (at same frequency).

Of course, if you happen to be an NGMN whitepaper purist, you will now tell me that my ambition should only be to provide a sustainable 50 Mbps per user connection. It is nevertheless an interesting thought exercise to explore whether residential areas could be served, by the existing macro-cellular network, with a much higher consistent throughput than 50 Mbps, a level that might ultimately be covered by LTE rather than needing to go to 5G. Anyway, both Rachid El Hattachi and Javan Erfanian knew well enough to hedge their 5G speed vision against the reality of economics and statistical fluctuation.

and I really don’t care about the 1,000x (LTE) bandwidth per unit area promise!

Why? The 1,000x promise is a fairly trivial promise. To achieve it, I simply need a high enough frequency and a large enough bandwidth (and those two, as pointed out, go nicely hand in hand). Take a 100 meter 5G-cell range versus a 1 km LTE-cell range. The 5G-cell is 100 times smaller in coverage area, and with 10x more 5G spectral bandwidth than for LTE (e.g., 200 MHz 5G vs 20 MHz LTE), I would have the factor 1,000 in throughput bandwidth per unit area. This is without having to assume mMiMo, which I could also choose to use for LTE with pretty much the same effect.
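For readers who like to see the arithmetic, here is a minimal sketch of the 1,000x argument. It assumes equal spectral efficiency for both technologies and that cell area scales with the square of the cell range; the function name is purely illustrative.

```python
def area_capacity_gain(ref_range_m, new_range_m, ref_bw_mhz, new_bw_mhz):
    """Gain in throughput per unit area of a new cell versus a reference cell.

    Assumptions (not from any standard): identical spectral efficiency and
    cell area proportional to the square of the cell range.
    """
    area_ratio = (ref_range_m / new_range_m) ** 2   # how many new cells fit in one reference cell
    bandwidth_ratio = new_bw_mhz / ref_bw_mhz       # how much more spectrum each new cell carries
    return area_ratio * bandwidth_ratio


# 1 km LTE cell with 20 MHz versus 100 m 5G cell with 200 MHz
gain = area_capacity_gain(ref_range_m=1000, new_range_m=100,
                          ref_bw_mhz=20, new_bw_mhz=200)
print(f"Throughput per unit area gain: {gain:.0f}x")
```

The factor 100 comes from the cell area and the factor 10 from the bandwidth; no antenna magic is involved.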

Detour to the cool world of Academia: University of Bristol recently (March 2016) published a 5G spectral efficiency of ca. 80 Mbps/MHz in a 20 MHz channel. This is about 12 times higher than state-of-the-art LTE spectral efficiency. Their base station antenna system was based on so-called massive MiMo (mMiMo) with 128 antenna elements, supporting 12 users in the cell at approx. 1.6 Gbps in total (i.e., 20 MHz x 80 Mbps/MHz). The proof-of-concept system operated at 3.5 GHz and in TDD mode (note: mMiMo does not scale as well for FDD and poses in general more challenges in terms of spectral efficiency). National Instruments provides a very nice overview of 5G mMiMo systems in their whitepaper “5G Massive MiMo Testbed: From Theory to Reality”.

A picture of the antenna system is shown below;

Figure above: One of the World’s First Real-Time massive MIMO Testbeds–Created at Lund University. Source: “5G Massive MiMo (mMiMo) Testbed: From Theory to Reality” (June 2016).

For a good read and background on advanced MiMo antenna systems I recommend Chockalingam & Sundar Rajan’s book on “Large MiMo Systems” (Cambridge University Press, 2014). Though there are many excellent accounts of simple MiMo, higher-order MiMo, massive MiMo, Multi-user MiMo antenna systems and the fundamentals thereof.

Back to naughty (i.e., my 5G macro cellular network);

So let’s just assume that the above mMiMO system, for our 5G macro-cellular network,

- Ignoring that such systems originally were designed for, and work best in, TDD based systems.

- and keeping in mind that FDD mMiMo performance tends to be lower than TDD all else being equal.

will, in due time, be available for 5G with a channel of at least 20 MHz @ 1800MHz. And at a form factor that can be integrated well with existing macro cellular design without incremental TCO.

This is a very (VERY!) big assumption. Massive MiMo systems at normal cellular frequency ranges are likely to require substantially more antenna space. The structural integrity of site designs would have to be checked and possibly reinforced to allow for the advanced antenna system, contributing to both additional capital cost and possibly incremental tower/site lease.

So we have (in theory) a 5G macro-cellular overlay network with at least cell speeds of 1+Gbps, which is ca. 10 – 20 times that of today’s LTE networks cell performance (not utilizing massive MiMo!). If I have more 5G spectrum available, the performance would increase linearly (and a bit) accordingly.
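As a rough sanity check of the numbers above, the sketch below multiplies channel bandwidth by spectral efficiency. It uses the Bristol figures quoted earlier (ca. 80 Mbps/MHz, 12 users) and assumes purely linear scaling with bandwidth, so the "and a bit" is deliberately left out. The extra bandwidths of 40 and 100 MHz are only illustrative.

```python
def cell_throughput_mbps(bandwidth_mhz, spectral_eff_mbps_per_mhz):
    """Aggregate cell throughput, assuming it scales linearly with bandwidth."""
    return bandwidth_mhz * spectral_eff_mbps_per_mhz


MMIMO_SE = 80.0   # Mbps/MHz, the Bristol mMiMo result quoted in the text
USERS = 12        # users served simultaneously in the Bristol trial

for bw_mhz in (20, 40, 100):   # 40 and 100 MHz are illustrative assumptions
    cell = cell_throughput_mbps(bw_mhz, MMIMO_SE)
    print(f"{bw_mhz:>3} MHz: cell {cell / 1000:.1f} Gbps, "
          f"per user {cell / USERS:.0f} Mbps with {USERS} users")

# LTE cell throughput implied by the "10 - 20 times" statement for 20 MHz
cell_20 = cell_throughput_mbps(20, MMIMO_SE)
print(f"Implied LTE cell throughput: {cell_20 / 20:.0f} - {cell_20 / 10:.0f} Mbps")
```

Note that the 1+ Gbps is a cell figure. Shared equally between 12 simultaneous users, it is well below 1 Gbps per connection in a 20 MHz channel.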

The observant reader will know that I have largely ignored the following challenges of massive MiMo (see also Larsson et al’s “Massive MiMo for Next Generation Wireless Systems” 2014 paper);

- mMiMo is designed for TDD, but works at some performance penalty for FDD.

- Whether mMiMo will really be deployable at low total cost of ownership (i.e., it is not enough that the antenna system itself is low cost!).

- The mMiMo performance leapfrog comes at the price of high computational complexity (i.e., should be factored into the deployment cost).

- mMiMo relies on distributed processing algorithms, which at this scale are relatively unexplored territory (i.e., should be factored into the deployment cost).

But wait a minute! I might (naively) theorize away the additional operational cost of the active electronics and antenna systems on the 5G cell site (overlaid on the legacy already present!). I might further assume that the Capex of the 5G radio & antenna system can be financed within the regular modernization budget (assuming such a budget exists). But … But surely our access and core transport networks have not been scaled for a factor 10 – 20 (and possibly a lot more than that) increase in throughput per active customer?

No it has not! Really Not!

Though some modernized converged Telcos might be a lot better positioned for the fixed broadband transformation required to sustain the 5G speed promise.

For most mobile operators, it is highly likely that substantial re-design and investments of transport networks will have to be made in order to support the 5G target performance increase above and beyond LTE.

Definitely a lot more on this topic in a subsequent Blog.

ON THE 5G PROMISES.

Let’s briefly examine the 8 above 5G promises or visionary statements and how these impact the underlying economics. As this is an introductory chapter, the deeper dive and analysis will be deferred to subsequent chapters.

NEED FOR SPEED.

PROMISE 1: From 1 to 10 Gbps in actual experienced 5G speed per connected device (at a max. of 10 ms round-trip time).

PROMISE 2: Minimum of 50 Mbps per user connection everywhere (at a max. of 10 ms round-trip time).

PROMISE 3: Thousand times more bandwidth per unit area (compared to LTE).

Before anything else, it would be appropriate to ask a couple of questions;

“Do I need this speed?” (The expert answer if you are living inside the Telecom bubble is obvious! Yes Yes Yes …. Customers will not know they need it until they have it! …).

“That kind of sustainable speed for what?” (Telecom bubble answer would be! Lots of useful things! … much better video experience, 4K, 8K, 32K –> fully immersive holographic VR experience … Lots!)

“Am I willing to pay extra for this vast improvement in my experience?” (Telecom bubble answer would be … ahem … that’s really a business model question and let’s just have marketing deal with that later).

What is true however is:

My objective measurable 5G customer experience, assuming the speed-coverage-reliability promise is delivered, will quantum leap to un-imaginable levels (in terms of objectively measured performance increase).

Maybe more importantly, will the 5G customer experience from the very high speed and very low latency really be noticeable to the customer? (i.e, the subjective or perceived customer experience dimension).

Let’s ponder on this!

In Europe at the end of 2016, the urban LTE speed and latency user experience per connection would of course depend on which network the customer was on (not all being equal);

In 2016, an urban LTE user in Europe experienced on average a DL speed of 31±9 Mbps, a UL speed of 9±2 Mbps and a latency of around 41±9 milliseconds. Keep in mind that OpenSignal is likely to be closer to the real user’s smartphone OTT experience, as it pings a server external to the MNO’s network. It should also be noted that although the OpenSignal measure might be closer to the real customer experience, it still does not capture the full experience of, for example, page load or video stream initialization and start.

The 31 Mbps urban LTE user experience throughput provides for a very good video streaming experience at 1080p (i.e., full high definition video) even on a large TV screen. Even a 4K video stream (15 – 32 Mbps) might work well, provided the connection stability is good and that you have the screen to appreciate the higher resolution (i.e., a lot bigger than your 5.5” iPhone 7 Plus). You are unlikely to see the slightest difference on your mobile device between 1080p (9 Mbps) and 480p (1.0 – 2.3 Mbps) unless you have a high visual acuity, which is usually reserved for the healthy & young.

With 5G, the DL speed is targeted to be at least 1 Gbps and could be as high as 10 Gbps, all delivered within a round trip delay of maximum 10 milliseconds.

The 5G target by launch (in 2020) is to deliver at least 30+ times more real experienced bandwidth (in the DL) compared to what an average LTE user would experience in Europe in 2016. The end-2-end round trip delay, or responsiveness, of 5G is aimed to be at least 4 times better than the average experienced responsiveness of LTE in 2016. For comparison, the actual experience gain of LTE over 3G has been between 5 – 10 times in DL speed, approx. 3 – 5 times in UL and between 2 to 3 times in latency (i.e., pinging the same server exterior to the mobile network operator).
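The gain factors follow directly from the averages quoted above. A minimal sketch, using the 2016 urban LTE averages (31 Mbps DL, 41 ms latency) and the lower end of the 5G targets (1 Gbps, 10 ms); the dictionary names are just for illustration.

```python
# Average urban LTE experience in Europe 2016 (figures quoted in the text)
lte_2016 = {"dl_mbps": 31.0, "latency_ms": 41.0}

# Lower end of the 5G targets (1 Gbps downlink, max. 10 ms round trip)
target_5g = {"dl_mbps": 1000.0, "latency_ms": 10.0}

dl_gain = target_5g["dl_mbps"] / lte_2016["dl_mbps"]
latency_gain = lte_2016["latency_ms"] / target_5g["latency_ms"]

print(f"Downlink speed gain: {dl_gain:.0f}x")
print(f"Latency gain:        {latency_gain:.1f}x")
```

At the upper 10 Gbps target the downlink factor would be ten times larger still.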

According to Sandvine’s 2015 report “Global Internet Phenomena Report for APAC & Europe”, in Europe approx. 46% of the downstream fixed peak aggregate traffic comes from real-time entertainment services (e.g., video & audio streamed or buffered content such as Netflix, YouTube and IPTV in general). The same report also identifies that for mobile (in Europe) approx. 36% of the mobile peak aggregate traffic comes from real-time entertainment. It is likely that the real share of real-time entertainment is higher, as video content embedded in social media might not be counted in this category but rather in Social Media. Particularly for mobile, this would bring up the share by between 10% and 15% (more in line with what is actually measured inside mobile networks). Real-time entertainment and real-time services in general are the single most important and impacting traffic category for both fixed and mobile networks.

Video viewing experience … more throughput is maybe not better, more could be useless.

Video consumption is a very important component of real-time entertainment. It amounts to more than 90% of the bandwidth consumption in the category. The Table below provides an overview of video formats, number of pixels, and their network throughput requirements. The tabulated screen size is what is required (at a reasonable viewing distance) to detect the benefit of a given video format in comparison with the previous one. So in order to really appreciate 4K UHD (ultra high definition) over 1080p FHD (full high definition), you would as a rule of thumb need double the screen size (note there are also other ways to improve the perceived viewing experience). Also for comparison, the Table below includes data for mobile devices, which obviously have a higher screen resolution in terms of pixels per inch (PPI) or dots per inch (DPI). Apart from 4K (~8 MP) and to some extent 8K (~33 MP), the 16K (~132 MP) and 32K (~528 MP) formats are still rather exotic standards with limited mass market appeal (at least as of now).

We should keep in mind that there are limits to human vision, with the young and healthy having a substantially better visual acuity than what can be regarded as normal 20/20 vision. Most magazines are printed at 300 DPI and most modern smartphone displays are designed for 300 DPI (or PPI) or more. Even Steve Jobs has addressed this topic;

However, it is fair to point out that this assumed human vision limitation is debatable (and has been debated a lot). There is little consensus on this, maybe with the exception that the ultimate limit (at a distance of 4 inch or 10 cm) is 876 DPI, or approx. 300 DPI (at 11.5 inch / 30 cm).

Anyway, what really matters is the customers experience and what they perceive while using their device (e.g., smartphone, tablet, laptop, TV, etc…).

So let’s do the visual acuity math for smartphone-like displays;

We see (from the above chart) that for an iPhone 6/7 Plus (5.5” display), at any viewing distance above approx. 50 cm a normal eye (i.e., 20/20 vision) would become insensitive to video formats better than 480p (1 – 2.3 Mbps). In my case, my typical viewing distance is ca. 30+ cm and I might get some benefits from 720p (2.3 – 4.5 Mbps) as opposed to 480p. Sadly my sight is worse than the norm of 20/20 (i.e., old! and let’s just leave it at that!) and thus I remain insensitive to the resolution improvements 720p would provide. If you have a device with a display at or below 4” (e.g., iPhone 5 & 4), the viewing distance where normal eyes become insensitive is ca. 30+ cm.
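The viewing distances can be approximated with a simple rule. The sketch below assumes that a normal 20/20 eye resolves about 1 arcminute, that the video fills a 16:9 display, and that 480p means 854 x 480 pixels. These are common rules of thumb and my assumptions here, not necessarily the exact method behind the chart.

```python
import math

ONE_ARCMIN_RAD = math.radians(1 / 60)   # assumed resolution limit of a 20/20 eye
CM_PER_INCH = 2.54

# Assumed 16:9 pixel dimensions of the video formats
FORMATS = {"480p": (854, 480), "720p": (1280, 720), "1080p": (1920, 1080)}


def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density when the format exactly fills a display of this diagonal."""
    return math.hypot(width_px, height_px) / diagonal_in


def max_useful_distance_cm(width_px, height_px, diagonal_in):
    """Viewing distance beyond which one pixel subtends less than 1 arcminute,
    i.e., beyond which a higher resolution format adds nothing visible."""
    pixel_pitch_in = 1 / pixels_per_inch(width_px, height_px, diagonal_in)
    return pixel_pitch_in / math.tan(ONE_ARCMIN_RAD) * CM_PER_INCH


for diagonal in (5.5, 4.0):
    for name, (w, h) in FORMATS.items():
        d = max_useful_distance_cm(w, h, diagonal)
        print(f'{diagonal}" display, {name}: pixels blur together beyond ~{d:.0f} cm')
```

Under these assumptions a 5.5” display showing 480p lands at just under 50 cm, 720p at a little above 30 cm, and a 4” display showing 480p at roughly 35 cm, which is in line with the distances read off the chart.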

All in all, it would appear that unless cellular user equipment, and the way it is being used, changes very fundamentally, the 480p to 720p range might be more than sufficient.

If this is true, it also implies that a cellular 5G user on a reliable good network connection would need no more than 4 – 5 Mbps to get an optimum viewing (and streaming) experience (i.e., 720p resolution).

The 5 Mbps streaming speed, for optimal viewing experience, is very far away from our 5G 1-Gbps promise (x200 times less)!

Assuming that instead of streaming we want to download movies, and assuming we have lots of memory available on our device … hmmm … then a typical 480p movie could be downloaded in ca. 10 – 20 seconds at 1 Gbps, a 720p movie in between 30 and 40 seconds, and a 1080p movie would take 40 to 50 seconds (and would likely be a waste due to the limitations of your vision).

However with a 5G promise of super reliable ubiquitous coverage, I really should not need to download and store content locally on storage that might be pretty limited.

Downloads to cellular devices or home storage media appear somewhat archaic, but would benefit from the promised 5G speeds.

I could share my 5G-Gbps with other users in my surroundings. A typical Western European household in 2020 (i.e., about the time when 5G will launch) would have 2.17 inhabitants (2.45 in Central Eastern Europe). Watching individual / different real-time content would require multiples of the bandwidth of the optimum video resolution. I could have multiple video streams running in parallel, to the many display devices that are likely to be present in the consumer’s home, etc… Still, even at fairly high video streaming bit rates, a consumer would be far away from consuming the 1 Gbps (imagine if it was 10 Gbps!).
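To put a number on "far away", here is a small sketch that counts how many parallel streams fit into 1 Gbps. The per-stream bit rates are the upper ends of the ranges quoted earlier in this blog (5 Mbps for 720p, 9 Mbps for 1080p, 32 Mbps for 4K); protocol overhead is ignored, which is an assumption.

```python
LINK_MBPS = 1000.0   # the 1 Gbps 5G promise

# Per-stream bit rates in Mbps (upper ends of the ranges quoted in the text)
STREAM_MBPS = {"720p": 5.0, "1080p": 9.0, "4K": 32.0}

HOUSEHOLD_SIZE = 2.17   # Western European household in 2020, as quoted


def max_parallel_streams(link_mbps, stream_mbps):
    """Whole number of streams that fit in the link, ignoring overhead."""
    return int(link_mbps // stream_mbps)


for name, rate in STREAM_MBPS.items():
    streams = max_parallel_streams(LINK_MBPS, rate)
    household_share = HOUSEHOLD_SIZE * rate / LINK_MBPS
    print(f"{name:>5}: {streams:>3} parallel streams fit; one stream per "
          f"household member uses {household_share:.1%} of the link")
```

Even if every household member watched their own 4K stream, less than a tenth of the 1 Gbps would be in use.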

Okay … so video consumption, independent of mobile or fixed devices, does not seem to warrant anywhere near the 1 – 10 Gbps per connection.

Surely EU Commission wants it!

EU Member States have their specific broadband coverage objectives, namely: ‘Universal Broadband Coverage with speeds at least 30 Mbps by 2020’ (i.e., will be met by LTE!) and ‘Broadband Coverage of 50% of households with speeds at least 100 Mbps by 2020’ (also likely to be met with LTE and fixed broadband means).

The European Commission’s “Broadband Coverage in Europe 2015” reports that 49.2% of EU28 Households (HH) have access to 100 Mbps or more (i.e., 50.8% of all HH have access to less than 100 Mbps) and 68.2% to broadband speeds above 30 Mbps (i.e., 31.8% of all HH with access to less than 30 Mbps). No more than 20.9% of HH within EU28 have FTTP (e.g., DE 6.6%, UK 1.4%, FR 15.5%, DK 57%).

The EU28 average is pretty good and in line with the target. However, on an individual member state level, there are big differences. Also within each of the EU member states great geographic variation is observed in broadband coverage.

Interestingly, the 5G promises on per user connection speed (1 – 10 Gbps), coverage (user perceived 100%) and reliability (user perceived 100%) are far more ambitious than the broadband coverage objectives of the EU member states.

So maybe indeed we could make the EU Commission and Member States happy with the 5G throughput promise (this point should not be underestimated).

Web browsing experience … more throughput and all will be okay myth!

So … Surely, the Gbps speeds can help provide a much faster web browsing / surfing experience than what is experienced today for LTE and for the fixed broadband? (if there ever was a real Myth!).

In other words the higher the bandwidth, the better the user’s web surfing experience should become.

While bandwidth (of course) is a factor in the customer’s browsing experience, it is but one factor out of several that govern the customer’s real & perceived internet experience; e.g., DNS lookups (this can really mess up user experience), TCP, SSL/TLS negotiation, HTTP(S) requests, VPN, RTT/latency, etc…

An excellent account of these various effects is given by Jim Gettys’ “Traditional AQM is not enough” (i.e., AQM: Active Queue Management). Measurements (see Jim Gettys’ blog) strongly indicate that above a relatively modest bandwidth (6+ Mbps) there is no longer any noticeable difference in page load time. In my opinion there are a lot of low hanging fruits in network optimization that provide larger relative improvements in customer experience than network speed alone.

Thus one might carefully conclude that, above a given throughput threshold, it is unlikely that more throughput would have a significant effect on the consumer’s browsing experience.

More work needs to be done in order to better understand the experience threshold after which more connection bandwidth has diminishing returns on the customer’s browsing experience. However, it would appear that a 1 Gbps 5G connection speed would be far above that threshold. An average web page in 2016 was 2.2 MB, which at the average urban LTE speed of 31 Mbps would take 568 ms to load fully, provided connection speed was the only limitation (which is not the case). For 5G at 1 Gbps the same page would download within 18 ms, assuming that connection speed was the only limitation.
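The two load times are pure transfer-time arithmetic. A minimal sketch, assuming decimal units (1 MB = 8 Mbit) and that connection speed is the only limitation, which as noted is not the case in practice:

```python
def transfer_time_ms(size_mbyte, speed_mbps):
    """Time to move size_mbyte over a link of speed_mbps, ignoring DNS, TCP,
    TLS and every other real-world delay (assumes 1 MB = 8 Mbit)."""
    return size_mbyte * 8 / speed_mbps * 1000


PAGE_MB = 2.2   # average web page size in 2016, as quoted in the text

for label, speed_mbps in (("LTE, 31 Mbps", 31.0), ("5G, 1 Gbps", 1000.0)):
    print(f"{label}: {transfer_time_ms(PAGE_MB, speed_mbps):.0f} ms")
```

A single extra round trip at today's 41 ms latency already costs more than twice the whole 5G transfer time, which is why latency and protocol handling tend to dominate the browsing experience.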

Downloading content (e.g., FTP).

Now we surely are talking. If I wanted to download the whole Library of the US Congress (I like digital books!), I am surely in need for speed!?

The US Congress has estimated that the whole print collection (i.e., 26 million books) adds up to 208 terabytes. Thus, assuming I have 208+ TB of storage, I could download the complete library of the US Congress within roughly 20 days (at 1 Gbps) to roughly 2 days (at 10 Gbps).

In fact, 1 Gbps would allow me to download 15+ books per second (assuming 1 book is on average 300 pages and formatted at 600 DPI TIFF, which is equivalent to ca. 8 Megabyte).
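The library arithmetic can be checked in a few lines. This sketch assumes decimal units (1 TB = 10^12 bytes), a fully sustained link speed and no protocol overhead; 10 Gbps is taken as the upper end of the 5G speed promise.

```python
LIBRARY_TB = 208        # estimated size of the print collection
BOOKS = 26_000_000      # number of books in the collection
SECONDS_PER_DAY = 86_400


def download_days(size_tb, speed_gbps):
    """Days needed to download size_tb at a sustained speed_gbps
    (assumes 1 TB = 1e12 bytes and no protocol overhead)."""
    bits = size_tb * 1e12 * 8
    return bits / (speed_gbps * 1e9) / SECONDS_PER_DAY


avg_book_mb = LIBRARY_TB * 1e12 / BOOKS / 1e6   # average book size in MB
books_per_second = (1e9 / 8 / 1e6) / avg_book_mb   # at 1 Gbps = 125 MB/s

print(f"Average book size: {avg_book_mb:.0f} MB")
print(f"Books per second at 1 Gbps: {books_per_second:.1f}")
for gbps in (1, 10):
    print(f"Whole library at {gbps} Gbps: {download_days(LIBRARY_TB, gbps):.1f} days")
```

The results come out close to the rounded figures quoted above: an 8 MB average book, a bit more than 15 books per second at 1 Gbps, and roughly 19 days for the whole collection.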

So clearly, for massive file sharing (music, videos, games, books, documents, etc…), the 5G speed promise is pretty cool.

Though, it does assume that consumers would continue to see a value in storing information locally on their personal devices or storage media. The idea remains archaic, but I guess there will always be renaissance folks around.

What about 50 Mbps everywhere (at a 10 ms latency level)?

Firstly, providing customers with a maximum latency of 10 ms with LTE is extremely challenging. It would be highly unlikely to be achieved within existing LTE networks, particularly if retransmissions are considered. From the OpenSignal December 2016 measurements shown in the chart below, for urban areas across Europe, the LTE latency is on average around 41±9 milliseconds. Considering the LTE latency variation, we are still 3 – 4 times away from the 5G promise. The country average would be higher than this. Clearly this is one of the reasons why the NGMN whitepaper proposes a new air-interface, as well as some heavy optimization and redesign in general across our Telco networks.

The urban LTE persistent experience level is very reasonable but remains lower than the 5G promise of 50 Mbps, as can be seen from the chart below;

The LTE challenge however is not the customer experience level in urban areas but on average across a given geography or country. Here LTE performs substantially worse (also on throughput) than what the NGMN whitepaper’s ambition is. Let us have a look at the current LTE experience level in terms of LTE coverage and in terms of (average) speed.

Based on the European Commission’s “Broadband Coverage in Europe 2015”, we observe that on average the total LTE household coverage is pretty good on an EU28 level. However, the rural households are in general underserved with LTE. Many of the EU28 countries still lack consistent LTE coverage in rural areas. As lower frequencies (e.g., 700 – 900 MHz) become available and can be overlaid on the existing rural networks, often based on a 900 MHz grid, LTE rural coverage can be improved greatly. Economically, this should be synchronized with the normal modernization cycles. However, with the current state of LTE (and rural network deployments) it might be challenging to reach a persistent level of 50 Mbps per connection everywhere. Furthermore, the maximum 10 millisecond latency target is highly unlikely to be feasible with LTE.

In my opinion, 5G would be important in order to uplift the persistent throughput experience to at least 50 Mbps everywhere (including cell edge). That is a target that would be very challenging to reach with LTE in the network topologies deployed in most countries (i.e., particularly outside urban/dense urban areas).

The customer experience value to the general consumer of a maximum 10 millisecond latency is in my opinion difficult to assess. At a 20 ms response time most experiences would appear instantaneous. The LTE performance of ca. 40 ms E2E external server response time should satisfy most customer experience use case requirements, besides maybe VR/AR.

Nevertheless, if the 10 ms 5G latency target can be designed into the 5G standard without negative economical consequences then that might be very fine as well.

Another aspect that should be considered is the additional 5G market potential of providing a persistent 50 Mbps service (at a good enough & low variance latency). Approximately 70% of EU28 households have at least a 30 Mbps broadband speed coverage. If we look at EU28 households with at least 50 Mbps, that drops to around 55% household coverage. With the 100% (perceived) coverage & reliability target of 5G as well as 50 Mbps everywhere, one might ponder the potential of the 30% to 45% of households that are likely underserved in terms of reliable good quality broadband. Pending the economics, 5G might be able to deliver good enough service at a substantially lower cost compared to more fixed-centric means.

Finally, following our exposé on video streaming quality, clearly a 50 Mbps persistent 5G connectivity would be more than sufficient to deliver a good viewing experience. Latency would be less of an issue in the viewing experience as long as the variation in the latency can be kept reasonably low.

Acknowledgement

I greatly acknowledge my wife Eva Varadi for her support, patience and understanding during the creative process of creating this Blog.

WORTHY 5G & RELATED READS.

- “NGMN 5G White Paper” by R.El Hattachi & J. Erfanian (NGMN Alliance, February 2015).

- “Understanding 5G: Perspectives on future technological advancement in mobile” by D. Warren & C. Dewar (GSMA Intelligence December 2014).

- “Fundamentals of 5G Mobile Networks” by J. Rodriguez (Wiley 2015).

- “The 5G Myth: And why consistent connectivity is a better future” by William Webb (2016).

- “Software Networks: Virtualization, SDN, 5G and Security” by G. Pujolle (Wiley 2015).

- “Large MiMo Systems” by A. Chockalingam & B. Sundar Rajan (Cambridge University Press 2014).

- “Millimeter Wave Wireless Communications” by T.S. Rappaport, R.W. Heath Jr., R.C. Daniels, J.N. Murdock (Prentice Hall 2015).

- “The Limits of Human Vision” by Michael F. Deering (Sun Microsystems).

- “Quad HD vs 1080p vs 720p comparison: here’s what’s the difference” by Victor H. (May 2014).

- “Broadband Coverage in Europe 2015: Mapping progress towards the coverage objectives of the Digital Agenda” by European Commission, DG Communications Networks, Content and Technology (2016).

Good read thanks- might be useful to also factor in the impact of spectrum choices (including pass band and channel plans and guard bands) and technology options on user and IOT device performance and cost as this continues to have a large impact on network economics and network added value. Also spare a thought for the satellite industry where technology innovation is reducing opex and capex whereas as you have pointed out technology innovation in mobile broadband coupled to network densification is increasing capex and opex. 5G as an ultra sparse network seems to be a much more appealing proposition- enabling technologies include fractional beam width antennas already widely used in Ka band HTS satellites and angular protection used in single chip automotive radar- these two technologies together make Ka band and E band link budgets much more interesting both in terms of data reach and power efficiency- with all best wishes- Geoff Varrall- RTT

Thank you Geoff! Spectrum is definitely on my agenda (for a Chapter) as well as satellites or alternative coverage solutions (e.g., drones a la Facebook’s Eagle or Aquila). Satellites, even LEO, as you of course know, are great at providing very high quality downlink speeds but suffer from the return link challenge. That usually results in adaptation to the device ecosystem and (usually) breaks the scale advantage of the mass market device ecosystem. If super low latencies (<10 ms) are required the satellite based solutions might not work very well. This said, we definitely need to keep an eye on satellite systems (particularly LEOs) as their costs are dramatically reduced and antenna technology improves further. So great suggestion!

I am generally more positive about next generation satellite link budgets particularly when signals are sent and received from and to multiple constellations – SES and O3B MEO/GSO being one example and 40 dBi of isotropic gain helps as well plus you get frequency reuse across the constellations -rethinking 5G as progressive point to point with back haul in band and satellite as a back haul or remote access option makes sense particularly if you look at the existing band plans at 28 and 38 GHz and the E band FDD bands either side of automotive radar – these are on a channel raster of 19 by 250 MHz with 125 MHz guard bands- ideal for 5G but this would mean the 5G industry would need to work with the satellite industry on standards which is presently unlikely – amazing success today from Space X with their Iridium launch – ten satellites on one lift and you get the rocket back- amazing stuff which the 5G community seem barely aware of – we are running a (free) 45 minute webinar on 5G and satellite this afternoon 4.00 GMT- join us if you can

https://collaborata.clickmeeting.com/137737362/registration_required

or via our home page http://www.rttonline.com#

all best wishes geoff

Early in the blog Dr. Kim writes:

– Backhaul & backbone transport transformation needed to support the 5G quantum leap in performance has been largely ignored.

– Relative weak on fixed – mobile convergence.

My comments: especially the last 10 mile backhaul could be expensive per end point without new technologies to unleash more wavelength on single fibers in a cost efficient manner. NG-PON2 standard has defined the goals, but few technologies are promising to make it possible in cost efficient manner. The NG-PON2 goals are 10G & 256 users per access backhaul fiber. On the optical layer in the backhaul access network, new low cost optical transceivers may have to promise 4 wavelength tunability (without expensive filters), extensive reach of 40 km, delivering 10 G for sharing between end-points for solving the issue that Dr. Kim is rightly pointing to re. backhaul support for 5G.

This is an impressive unpicking of the 5G hyperbole. In particular, your arguments about 1 ms latency. VR/AR is a very limited use case, and there is no way the entire global network is going to be rebuilt (with edge clouds and everything) on the business case of VR users. By the way, VR users are blinded and can’t be very mobile with a VR helmet on. Perhaps AR users. Not mentioned in this blog post, but the 5G vision documents mention driverless vehicles as another use case for 1 ms latency. This would be nice to have, but a driverless car will have autonomous intelligence for collision avoidance (and a car travelling at 140 km/h will only move 3.9 cm in 1 ms).

However, my interpretation after reading the various 5G vision papers is that LTE (with future upgrades) will continue to be used as the backbone for wide area coverage in the 5G vision, and that 5G/mmWaves will primarily be about massive capacity upgrades in dense urban areas. (On my own blog mobileforesight Dot com, I have written a 5G unpicking that is very similar to your arguments.)

Thank you Jonas! It is clear from the original vision paper that 5G is more than radio, although all the goodies being promised are largely radio/air interface related. From the perspective of Shannon there appears to be little more we can do with the single channel. Thus any antenna technology & additional spectrum we throw at the bandwidth / throughput demand could be applied to LTE as well as to 5G (apart from some minor finesse squeezing the last out of Shannon). Of course super low latency with LTE is not possible given the current TTI limit (i.e., 1 ms) and some of the other timescales involved in the latency budget.

My position is that if 5G is only limited to particular areas of super dense small cells, the technology will be largely irrelevant (at least the air interface part) and I cannot believe that the current funding and efforts are completely justified.

5G needs to be relevant to the mass market providing a substantially better customer experience than with today’s LTE. If not the case, then in my opinion the technology will become irrelevant.

This said, I do suspect that LTE Pro (or LTE X) will be renamed to 5G for the masses, and with some clever marketing around the topic the industry will come out on the right side of this 😉

Anyway, as many of the 5G experts “in the Box” are pointing out, 5G is not only about the air interface but about so many other things, e.g., core network slicing & dicing, cloudification & virtualization of network functions, orchestration, automation, edge computing (as we believe in the holy 1 ms), etc.

Nice read, and good to see that the current ‘given’ beliefs about 5G are being challenged. Despite the technical ambitions that some have, I believe that we still need to try to understand what the relevant 5G business targets would be and, as you state, “what would 5G as a technology bring, in additional Revenues, in Churn reduction, Capex & Opex (absolute) Efficiencies, etc”.

To date, the literature on 5G related business models is still in its infancy. However, some observations can be made based on existing literature. Zhang et al. (2015) have discussed a cloud-assisted business model. They pay attention to densification of the network by providing open access to small cells, with the aim of bringing value to customers through flexibility, security, efficiency, scalability, low costs and also profitability when there is small cell accessibility. There are still a lot of question marks on the economic analysis side of it from the operator point of view.

Andrews et al. (2014) have presented a collaborative business model. They discuss network infrastructure sharing in a heterogeneous (HetNet) environment for maximizing users’ data rate and creating more revenue. Rasheed et al. (2015) discussed an incentive and reputation business model to support cooperative network architecture and revenue generation. As the benefits of the discussed business model, they see possibilities for upgrading performance, connections, capacity, coverage of the network, quality of service, efficient network resource utilization (also from an environmental perspective) and new cooperative or innovative services. Rasheed et al. (2015) also discussed a brokerage business model that brings different kinds of buyers and sellers together through auctions to use network services.

And last but not least, El Hattachi & Erfanian (2015) discuss asset provider, connectivity provider and partner-service provider business models. The role of these business models is to support different kinds of customers and various levels of partnership activities. The value of these models would be the possibility to share infrastructure, receive particular network capabilities, enable software-oriented capabilities, provide anything as a service, achieve real-time network sharing, provide best IP connectivity, support self-configuration of the network and enable the provision of combined service offerings.

As the above examples show, we lack a coherent view on 5G’s business model.
