“From an economic and customer experience standpoint, deploying stratospheric drones may be significantly more cost-effective than establishing extensive terrestrial infrastructure.”

This article, in a different and somewhat shorter format, has also been published by New Street Research under the title “Stratospheric drones: A game changer for rural networks?”. You will need to register with New Street Research to get access.

As a mobile cellular industry expert and a techno-economist, the first time I was presented with the concept of stratospheric drones, I felt butterflies in my belly: that tingling feeling that I was seeing something that could be a huge disruptor of how mobile cellular networks are designed and built. Imagine getting rid of the profitability-challenged rural cellular networks (i.e., the towers, the energy consumption, the capital infrastructure investments) and, at the same time, offering much better quality to customers in rural areas than is possible with the existing cellular network we have deployed there. This is a technology that could fundamentally change the industry’s mobile cellular cost structure for the better while delivering a quantum leap in quality and, in general, provide economical broadband services to the unconnected at a fraction of the cost of our traditional ways of building terrestrial cellular coverage.

Back in 2015, I got involved with Deutsche Telekom AG Group Technology, under the leadership of Bruno Jacobfeuerborn, in working out the detailed operational plans, deployment strategies, and, of course, the business case as well as the general economics of building a stratospheric cellular coverage platform from scratch with the UK-based Stratospheric Platform Ltd [2], in which Deutsche Telekom is an investor. The investment thesis rested on our expectation that the stratospheric high-altitude platform would make a large part of mobile operators’ terrestrial rural cellular networks obsolete and on how it might strengthen mobile operator footprints in countries where rural and remote coverage was either very weak or non-existent (e.g., the USA, an important market for Deutsche Telekom AG).

At the time, our thoughts were to have a stratospheric coverage platform operational by 2025, 10 years after kicking off the program, with more than 100 high-altitude platforms covering a major Western European country and serving its rural areas. As so often with genuinely disruptive ideas, reality is unforgiving. Getting to deployment and operation of a high-altitude platform at scale is still some years out due to the lack of maturity of the flight platform and a number of open challenges: regulatory approvals for operating a HAP network at scale, increasing the operating window of the flight platform, fueling, technology challenges with the advanced antenna system, being allowed to deploy terrestrial-based cellular spectrum above terra firma, etc. Many of these challenges are progressing well, although slowly.

Globally, various companies are actively working on developing stratospheric drones to enhance cellular coverage. These include aerospace and defense giants like Airbus, advancing its Zephyr drone, and BAE Systems, collaborating with Prismatic on the PHASA-35 UAV. One of the most exciting HAPS companies focusing on developing world-leading high-altitude aircraft that I have come across during my planning work on how to operationalize a stratospheric cellular coverage platform is the German company Leichtwerk AG, which has its hydrogen-fueled StratoStreamer as well as a solar-powered platform under development, with the StratoStreamer being close to production-ready. Telecom companies like Deutsche Telekom AG and BT Group are experimenting with hydrogen-powered drones in partnership with Stratospheric Platforms Limited. Through its subsidiary HAPSMobile, SoftBank is also a significant player with its Sunglider project. Additionally, entities like China Aerospace Science and Technology Corporation and Cambridge Consultants contribute to this field by co-developing enabling technologies (e.g., advanced phased-array antenna, fuel technologies, material science, …) critical for the success and deployability of high-altitude platforms at scale, aiming to improve connectivity in rural, remote, and underserved areas.

The work on integrating High Altitude Platform (HAP) networks with terrestrial cellular systems involves significant coordination with international regulatory bodies like the International Telecommunication Union Radiocommunication Sector (ITU-R) and the World Radiocommunication Conference (WRC). This process is crucial for securing permission to reuse terrestrial cellular spectrum in the stratosphere. Key focus areas include negotiating the allocation and management of frequency bands for HAP systems and ensuring they don’t interfere with terrestrial networks. These efforts are vital for successfully deploying and operating HAP systems, enabling them to provide enhanced connectivity globally, both in rural areas where terrestrial cellular frequencies are already in use and in remote and underserved regions. At the latest conference, WRC-23, SoftBank successfully gained approval within the Asia-Pacific region to use mobile spectrum bands for stratospheric drone-based mobile broadband cellular services.

Most mobile operators have at least 50% of their cellular network infrastructure assets in rural areas. While necessary for providing the coverage that mobile customers have come to expect everywhere, these sites carry only a fraction of the total mobile traffic. Individually, rural sites have poor financial returns due to their proportional operational and capital expenses.

In general, the Opex of the cellular network takes up between 50% and 60% of the Technology Opex, and at least 50% of that can be attributed to maintaining and operating the rural part of the radio access network. Capex is more cyclical than Opex due to, for example, the modernization of radio access technology. Nevertheless, over a typical modernization cycle (5 to 7 years), the rural network demands a similar, if slightly smaller, share of Capex as it does of Opex. Typically, the Opex share of the rural cellular network may be around 10% of the corporate Opex, and its associated total cost is between 12% and 15% of the total expenses.

The global telecom towers market size in 2023 is estimated at ca. 26+ billion euros, ca. 2.5% of total telecom turnover, with a projected CAGR of 3.3% from now to 2030. The top 10 tower management companies manage close to 1 million towers worldwide for mobile CSPs. Although many mobile operators have chosen to spin off their passive site infrastructure, some have yet to spin off their cellular infrastructure to one of the many tower management companies, captive or independent, such as American Tower (224,019+ towers), Cellnex Telecom (112,737+ towers), Vantage Towers (46,100+ towers), GD Towers (41,600+ towers), etc.

IMAGINE.

Focusing on the low- or no-profitable rural cellular coverage.

Imagine an alternative coverage technology to the normal cellular one all mobile operators are using that would allow them to do without the costly and low-profitable rural cellular network they have today to satisfy their customers’ expectations of high-quality ubiquitous cellular coverage.

For the alternative technology to be attractive, it would need to deliver at least the same quality and capacity as the existing terrestrial-based cellular coverage for substantially better economics.

If a mobile operator with a 40% EBITDA margin did not need its rural cellular network, it could improve its margin by a sustainable 5 percentage points and increase its cash generation in relative terms by 50% (i.e., from 0.2×Revenue to 0.3×Revenue), assuming that the capex-to-revenue ratio of 20% before implementing the technology is reduced to 15% afterwards by avoiding modernization and capacity investments in the rural areas.
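The arithmetic behind this margin example can be sketched in a few lines of Python. This is only an illustration: the function name `cash_margin` is hypothetical, and cash generation is simplified here to EBITDA margin minus the capex-to-revenue ratio, which is the assumption implied by the 0.2×Revenue and 0.3×Revenue figures.

```python
def cash_margin(ebitda_margin: float, capex_to_revenue: float) -> float:
    """Cash generation as a share of revenue (simplified: EBITDA minus Capex)."""
    return ebitda_margin - capex_to_revenue


# Before: 40% EBITDA margin and 20% capex-to-revenue.
before = cash_margin(0.40, 0.20)

# After: margin improves by 5 percentage points, capex-to-revenue drops to 15%.
after = cash_margin(0.40 + 0.05, 0.15)

# Relative improvement in cash generation.
relative_gain = after / before - 1.0

print(f"before: {before:.2f} x revenue")
print(f"after:  {after:.2f} x revenue")
print(f"relative gain: {relative_gain:.0%}")
```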

Imagine that the alternative technology would provide a better cellular quality to the consumer for a quantum leap reduction of the associated cost structure compared to today’s cellular networks.

Such an alternative coverage technology might also impact the global tower companies’ absolute level of sustainable tower revenues, with a substantial proportion of revenue related to rural site infrastructure being at risk.

TERRESTRIAL CELLULAR RURAL COVERAGE – A MATTER OF POOR ECONOMICS.

When considering the quality we experience in a terrestrial cellular network, a comprehensive understanding of various environmental and physical factors is crucial to predicting the signal quality accurately. All these factors generally work against cellular signal propagation regarding how far the signal can reach from the transmitting cellular tower and the achievable quality (e.g., signal strength) that a customer can experience from a cellular service.

Firstly, the terrain plays a significant role. Rural landscapes often include varied topographies such as hills, valleys, and flat plains, each affecting signal reach differently. For instance, hilly or mountainous areas may cause signal shadowing and reflection, while flat terrains might offer less obstruction, enabling signals to travel further.

At higher frequencies (i.e., above 1 GHz), vegetation becomes an increasingly critical factor to consider. Trees, forests, and other dense foliage can absorb and scatter radio waves, attenuating signals. The type and density of vegetation, along with seasonal changes like foliage density in summer versus winter, can significantly impact signal strength.

The height and placement of transmitting and receiving antennas are also vital considerations. In rural areas, where there are fewer tall buildings, the height of the antenna can have a pronounced effect on the line of sight and, consequently, on the signal coverage and quality. Elevated antennas mitigate the impact of terrain and vegetation to some extent.

Furthermore, the lower density of buildings in rural areas means fewer reflections and less multipath interference than in urban environments. However, larger structures, such as farm buildings or industrial facilities, must be factored in, as they can obstruct or reflect signals.

Finally, the distance between the transmitter and receiver is fundamental to signal propagation. With typically fewer cell towers spread over larger distances, understanding how signal strength diminishes with distance is critical to ensuring reliable coverage at a high quality, such as high cellular throughput, as the mobile customer expects.

The typical way for a cellular operator to mitigate the environmental and physical factors that inevitably result in loss of signal strength and reduced cellular quality (i.e., sub-standard cellular speed) is to build more sites and thus incur increasing Capex and Opex in areas that, in general, will have a poor economic payback on any cellular assets. Such investments make an already poor economic situation even worse, as the rural cellular network generally has very low utilization.

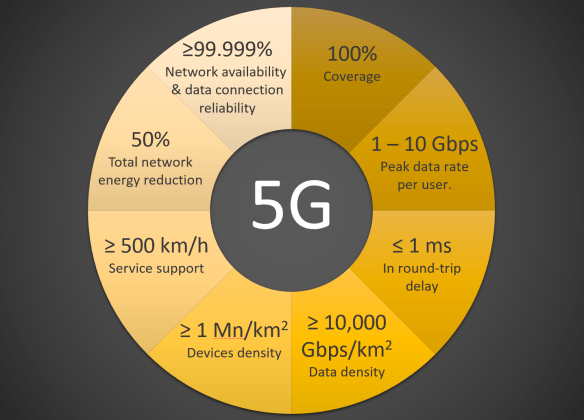

As our alternative technology also would need to provide at least the same quality and capacity it is worth exploring what can be expected in terms of rural terrestrial capacity. In general, we have that the cellular capacity (and quality) can be written as (also shown in Figure 2 above):

Throughput (in Mbps) = Spectral Bandwidth (in MHz) × Effective Spectral Efficiency (in Mbps/MHz/Cell) × Number of Cells

We need to keep in mind an additional important factor when considering quality and capacity: the higher the operational frequency, the smaller the coverage radius (all else being equal). Typically, we can improve the radius at higher frequencies by utilizing advanced antenna beamforming, that is, by concentrating the radiated power per unit coverage area, which is why you will often hear that the 3.6 GHz downlink coverage radius is similar to that of 1800 MHz (or PCS). However, this 3.6 GHz vs. 1.8 GHz comparison is made when not all else is equal. It compares a situation where the 1800 MHz (or PCS) radiated power is spread out over the whole coverage area with one where the 3.6 GHz (or C-band in general) solution makes use of beamforming. With beamforming, the transmitted energy density is high, allowing the signal to reach the customer at a range that would not be possible if the 3.6 GHz radiated power were spread out over the cell as in the 1800 MHz example.

As an example, take an average Western European rural 5G site with all cellular bands between 700 and 2100 MHz activated. The site will have a total of 85 MHz DL and 75 MHz UL, with the 10 MHz difference between DL and UL due to band 38 Supplementary Downlink (SDL) being operational on the site. In our example, we will be optimistic and assume that the effective spectral efficiency is 2 Mbps per MHz per cell (averaged over all bands and antenna configurations), which would indicate a fair amount of 4×4 and 8×8 MIMO antenna systems deployed. Thus, the unit throughput we would expect to be supplied by the terrestrial rural cell would be 170 Mbps (i.e., 85 MHz × 2.0 Mbps/MHz/Cell). With a rural cell coverage radius between 2 and 3 km, we then have an average throughput per square kilometer of ca. 9 Mbps/km². Due to the low demand and the large amount of frequency bandwidth available per active customer, DL speeds exceeding 100 Mbps should be relatively easy to sustain with 5G standalone, with uplink speeds being more compromised due to the larger coverage areas. Obviously, the rural quality can be improved further by deploying advanced antenna systems and increasing the share of higher-order MIMO antennas in general, as well as by increasing the rural site density. However, as already pointed out, this would not be an economically reasonable approach.
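The rural site example can be reproduced with a short Python sketch. The helper names are hypothetical, and the cell is assumed to be a simple circle of the stated radius, which is one plausible reading of how the ca. 9 Mbps/km² figure arises (it corresponds to a radius of about 2.5 km).

```python
import math


def cell_throughput_mbps(bandwidth_mhz: float, efficiency: float, cells: int = 1) -> float:
    """Throughput = bandwidth x effective spectral efficiency x number of cells."""
    return bandwidth_mhz * efficiency * cells


def circular_area_km2(radius_km: float) -> float:
    """Area of an idealized circular cell (assumption: no overlap, flat terrain)."""
    return math.pi * radius_km ** 2


# Downlink example from the text: 85 MHz at 2.0 Mbps/MHz/cell.
dl_mbps = cell_throughput_mbps(85.0, 2.0)
print(f"cell throughput: {dl_mbps:.0f} Mbps")

# Throughput density across the stated 2 to 3 km rural radius range.
for radius_km in (2.0, 2.5, 3.0):
    density = dl_mbps / circular_area_km2(radius_km)
    print(f"radius {radius_km:.1f} km -> {density:.1f} Mbps/km2")
```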

THE ADVANTAGE OF SEEING FROM ABOVE.

It may sound silly to talk about an alternative coverage technology that could replace the need for the cellular tower infrastructure that today is critical for providing mobile broadband coverage to, for example, rural areas. What alternative coverage technologies should we consider?

If, instead of relying on terrestrial-based tower infrastructure, we could move the cellular antenna and possibly the radio node itself to the sky, we would have a situation where most points of the ground would be in the line of sight to the “antenna-in-the-sky.” The antenna in the sky idea is a game changer in terms of coverage itself compared to conventional terrestrial cellular coverage, where environmental and physical factors dramatically reduce signal propagation and signal quality.

The key advantage of an antenna in the sky (AIS) is that the likelihood of a line-of-sight to a point on the ground is very high, whereas for terrestrial cellular coverage it is, in general, very low. In other words, the cellular signal propagation from an AIS closely approximates that of free space. Thus, the various environmental signal loss factors we must consider for a standard terrestrial-based mobile network largely do not apply to our antenna in the sky.
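To give a feel for what "free space" means in numbers, the sketch below uses the standard free-space path loss formula, FSPL(dB) = 20·log10(d in km) + 20·log10(f in MHz) + 32.44. The 20 km distance and the three frequencies are illustrative assumptions, not link-budget values from this article; a terrestrial link of similar length would carry additional terrain, vegetation, and clutter losses on top of this.

```python
import math


def fspl_db(distance_km: float, frequency_mhz: float) -> float:
    """Free-space path loss in dB for a distance in km and a frequency in MHz."""
    return 20 * math.log10(distance_km) + 20 * math.log10(frequency_mhz) + 32.44


# Illustrative assumption: antenna directly overhead at 20 km.
ALTITUDE_KM = 20.0

for freq_mhz in (700.0, 1800.0, 3600.0):
    print(f"{freq_mhz:6.0f} MHz over {ALTITUDE_KM:.0f} km: {fspl_db(ALTITUDE_KM, freq_mhz):.1f} dB")

# In free space, doubling the distance costs about 6 dB.
extra_db = fspl_db(2 * ALTITUDE_KM, 1800.0) - fspl_db(ALTITUDE_KM, 1800.0)
print(f"doubling the distance adds {extra_db:.2f} dB")
```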

Over the last ten years, we have gotten several technology candidates for our antenna-in-the-sky solution, aiming to provide terrestrial broadband services as a substitute, or enhancement, for terrestrial mobile and fixed broadband services. In the following, I will describe two distinct types of antenna-in-the-sky solutions: (a) Low Earth Orbit (LEO) satellites, operating between 500 and 2,000 km above Earth, that provide terrestrial broadband services such as we know from Starlink (SpaceX), OneWeb (Eutelsat Group), and Kuiper (Amazon), and (b) so-called High Altitude Platforms (HAPS), operating at altitudes between 15 and 30 km (i.e., in the stratosphere). Such platforms are still in the research and trial stages but are very promising technologies to substitute or enhance rural network broadband services. The HAP is supposed to be unmanned, highly autonomous, and ultimately operational in the stratosphere for an extended period (weeks to months), fueled by green hydrogen and possibly solar. The high-altitude platform is thus also an unmanned aerial vehicle (UAV), and I will use the terms stratospheric drone and HAP interchangeably in the following.

Low Earth Orbit (LEO) satellites and High Altitude Platforms (HAPs) represent two distinct approaches to providing high-altitude communication and observation services. LEO satellites, operating between 500 km and 2,000 km above the Earth, orbit the planet, offering broad global coverage. The LEO satellite platform is ideal for applications like satellite broadband internet, Earth observation, and global positioning systems. However, deploying and maintaining these satellites involves complex, costly space missions and sophisticated ground control. That said, as SpaceX has demonstrated with the Starlink LEO satellite fixed broadband platform, the unit economics of the satellites improve significantly with scale (i.e., the number of satellites), even when the launch cost is also considered.

Figure 4 illustrates a non-terrestrial network architecture consisting of a Low Earth Orbit (LEO) satellite constellation providing fixed broadband services to terrestrial users. Each hexagon represents a satellite beam inside the larger satellite coverage area. Note that, in general, there will be some coverage overlap between individual satellites, ensuring a continuous service including interconnected satellites. The user terminal (UT) dynamically aligns itself, aiming at the best quality connection provided by the satellites within the UT field of vision.

On the other hand, HAPs, such as unmanned (autonomous) stratospheric drones, operate at altitudes of approximately 15 km to 30 km in the stratosphere. Unlike LEO satellites, the stratospheric drone can hover or move slowly over specific areas, often geostationary relative to the Earth’s surface. This characteristic makes them more suitable for localized coverage tasks like regional broadband, surveillance, and environmental monitoring. The deployment and maintenance of the stratospheric drones are managed from the Earth’s surface and do not require space launch capabilities. Furthermore, enhancing and upgrading the HAPs is straightforward, as they will regularly be on the ground for fueling and maintenance. Upgrades are not possible with an operational LEO satellite solution where any upgrade would have to wait on a subsequent generation and new launch.

Figure 5 illustrates the high-level network architecture of an unmanned autonomous stratospheric drone-based constellation providing terrestrial cellular broadband services to terrestrial mobile users delivered to their normal 5G terminal equipment. Each hexagon represents a beam arising from the phased-array antenna integrated into the drone’s wingspan. To deliver very high-availability services to a rural area, one could assign three HAPs to cover a given area. The drone-based non-terrestrial network is drawn consistent with the architectural radio access network (RAN) elements from Open RAN, e.g., Radio Unit (RU), Distributed Unit (DU), and Central Unit (CU). It should be noted that the whole 5G gNB (the 5G NodeB), including the CU, could be integrated into the stratospheric drone, and in fact, so could the 5G standalone (SA) packet core, enabling full private mobile 5G networks for defense and disaster scenarios or providing coverage in very remote areas with little possibility of ground-based infrastructure (e.g., the arctic region, or desert and mountainous areas).

The unique advantages of a HAP operating in the stratosphere are twofold. (1) The altitude is advantageous for providing wide-area cellular coverage with a near-ideal quality, above and beyond what is possible with conventional terrestrial-based cellular coverage. This is because of the very high line-of-sight likelihood: the HAP suffers far less from the environmental and physical issues that substantially reduce the signal propagation and quality of a terrestrial coverage solution. (2) The stratosphere is characterized by more stable atmospheric conditions than the troposphere below it. This stability allows the stratospheric drone to maintain a consistent position and altitude with less energy expenditure. For a solar-powered HAP, the stratosphere also offers more consistent and direct sunlight exposure with less atmospheric attenuation. Moreover, due to the thinner atmosphere at stratospheric altitudes, the stratospheric drone will experience lower air resistance (drag), increasing the energy efficiency and, therefore, the operational airtime.

Each of these solutions has its unique advantages and limitations. LEO satellites provide extensive coverage but come with higher operational complexities and costs. HAPs offer more focused coverage and are easier to manage, but they lack the global reach of LEO satellites. The choice between these two depends on the specific requirements of the intended application, including coverage area, budget, and infrastructure capabilities.

In an era where digital connectivity is indispensable, stratospheric drones could emerge as a game-changing technology. These unmanned (autonomous) drones, operating in the stratosphere, offer unique operational and economic advantages over terrestrial networks and are even seen as competitive alternatives to low earth orbit (LEO) satellite networks like Starlink or OneWeb.

STRATOSPHERIC DRONES VS TERRESTRIAL NETWORKS.

Stratospheric drones, positioned much closer to the Earth’s surface than satellites, provide distinct signal strength and latency benefits. The HAP’s vantage point in the stratosphere (around 20 km above the Earth) ensures a high probability of line-of-sight with terrestrial user devices, mitigating the adverse effects of terrain obstacles that frequently challenge ground-based networks. This capability is beneficial in rural areas in general and particularly in mountainous or densely forested areas, where conventional cellular towers struggle to provide consistent coverage.

Why the stratosphere? The stratosphere is the layer of Earth’s atmosphere located above the troposphere, which is the layer where weather occurs. The stratosphere is generally characterized by stable, dry conditions with very little water vapor and minimal horizontal winds. It is also home to the ozone layer, which absorbs and filters out most of the Sun’s harmful ultraviolet radiation. It is also above the altitude of commercial air traffic, which typically flies at altitudes ranging from approximately 9 to 12 kilometers (30,000 to 40,000 feet). These conditions (in addition to those mentioned above) make operating a stratospheric platform very advantageous.

Figure 6 illustrates the coverage fundamentals of (a) a terrestrial cellular radio network with the signal strength and quality degrading increasingly as one moves away from the antenna and (b) the terrestrial coverage from a stratospheric drone (antenna in the sky) flying at an altitude of 15 to 30 km. The stratospheric drone, also called a High-Altitude Platform (HAP), provides near-ideal signal strength and quality due to direct line-of-sight (LoS) with the ground. In contrast, the signal and quality from a terrestrial cellular site are influenced by its environment and physical factors, and LoS is much less likely in a conventional terrestrial cellular network. It is worth keeping in mind that the coverage scenarios where a stratospheric drone and a low earth orbit satellite may excel in particular are rural areas and outdoor coverage in more dense urban areas. In urban areas, the clutter, or environmental features and objects, will make line-of-sight more challenging, impacting the strength and quality of the radio signals.

From an economic and customer experience standpoint, deploying stratospheric drones may be significantly more cost-effective than establishing extensive terrestrial infrastructure, especially in remote or rural areas. The setup and operational costs of cellular towers, including land acquisition, construction, and maintenance, are substantially higher compared to the deployment of stratospheric drones. These aerial platforms, once airborne, can cover vast geographical areas, potentially rendering numerous terrestrial towers redundant. At an operating height of 20 km, one would expect a coverage radius ranging from 20 km up to 500 km, depending on the antenna system, application, and business model (e.g., terrestrial broadband services, surveillance, environmental monitoring, …).
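The wide span of possible coverage radii follows largely from geometry. The sketch below is a purely geometric illustration (spherical Earth, no atmospheric refraction) of the ground radius within which a platform is visible at or above a given elevation angle. The function name and the chosen elevation angles are illustrative assumptions; in practice the usable radius is set by the antenna system and link budget rather than by visibility alone.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def coverage_radius_km(altitude_km: float, min_elevation_deg: float) -> float:
    """Ground-arc radius where the platform is seen at or above the elevation angle."""
    eps = math.radians(min_elevation_deg)
    ratio = EARTH_RADIUS_KM / (EARTH_RADIUS_KM + altitude_km)
    # Earth-central angle between the sub-platform point and the coverage edge.
    central_angle = math.acos(ratio * math.cos(eps)) - eps
    return EARTH_RADIUS_KM * central_angle


ALTITUDE_KM = 20.0
for elevation_deg in (0.0, 5.0, 10.0, 45.0):
    radius = coverage_radius_km(ALTITUDE_KM, elevation_deg)
    print(f"min elevation {elevation_deg:4.1f} deg -> radius {radius:6.1f} km")
```

At 20 km altitude, a 0 degree elevation angle (the geometric horizon) gives a radius of roughly 500 km, while requiring the drone to be at least 45 degrees above the horizon gives roughly 20 km, which brackets the range quoted above.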

The stratospheric drone-based coverage platform, and by platform I mean the complete infrastructure that will replace the terrestrial cellular network, will consist of unmanned autonomous drones with a considerable wingspan (e.g., 747-like, at ca. 69 meters). For example, the StratoStreamer from the German company Leichtwerk AG has a wingspan of 65 meters and a wing area of 197 square meters with a payload of 120+ kg (note: in comparison, a Boeing 747 has ca. 500+ m² of wing area, but its payload is obviously much higher, in the range of 50 to 60 metric tons). Leichtwerk AG works closely together with AESA in order to achieve the European Union Aviation Safety Agency (EASA) type certificate that would allow the HAPS to integrate into civil airspace (see ref. [34] for what that means).

An advanced antenna system is positioned under the wings (or the belly) of the drone. I will assume that the coverage radius provided by a single drone is 50 km, but it can dynamically be made smaller or larger depending on the coverage scenario and use case. The drone-based advanced antenna system breaks up the coverage area (ca. 6,500 km²) into 400 patches (a number that can be increased substantially), averaging approx. 16 km² per patch with a radius of ca. 2.5 km. Due to its near-ideal cellular link budget, the effective spectral efficiency is expected to be initially around 6 Mbps per MHz per cell. Additionally, the drone does not have the same spectrum limitations as a rural terrestrial site and would be able to support frequency bands in the downlink from ~900 MHz up to 3.9 GHz (and possibly higher, although likely with different antenna designs). Due to the HAP altitude, the Earth-to-HAP uplink signal will be limited to a lower frequency spectrum to ensure good signal quality is received at the stratospheric antenna. It is prudent to assume a limit of 2.1 GHz to possibly 2.6 GHz. All of this is under the assumption that the stratospheric drone operator has achieved regulatory approval for operating the terrestrial cellular spectrum from their coverage platform. It should be noted that today, cellular frequency spectrum approved for terrestrial use cannot be used at altitude unless regulatory permission has been given (more on this later).
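The patch geometry can be checked with a small Python sketch. It assumes, as a reading of the numbers rather than something stated explicitly, that both the drone footprint and the individual patches are regular hexagons whose "radius" is the circumradius; under that assumption a 50 km radius gives about 6,500 km², and 400 patches give about 16 km² each with a 2.5 km radius.

```python
import math

HEX_FACTOR = 1.5 * math.sqrt(3)  # area of a regular hexagon = HEX_FACTOR * circumradius^2


def hexagon_area_km2(circumradius_km: float) -> float:
    """Area of a regular hexagon given its circumradius (centre to corner)."""
    return HEX_FACTOR * circumradius_km ** 2


def hexagon_radius_km(area_km2: float) -> float:
    """Circumradius of a regular hexagon with the given area."""
    return math.sqrt(area_km2 / HEX_FACTOR)


footprint_km2 = hexagon_area_km2(50.0)      # single-drone coverage area
patches = 400                               # beams per drone in the example
patch_area_km2 = footprint_km2 / patches    # average area per patch
patch_radius_km = hexagon_radius_km(patch_area_km2)

print(f"footprint:    {footprint_km2:.0f} km2")
print(f"patch area:   {patch_area_km2:.1f} km2")
print(f"patch radius: {patch_radius_km:.2f} km")
```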

Let’s look at an example. We would need ca. 46 drones to cover the whole of Germany with the above-assumed specifications. Furthermore, if we take the average spectrum portfolio of the 3 main German operators, this implies that the stratospheric drone could be operating with up to 145 MHz in downlink and at least 55 MHz in uplink (i.e., limiting UL to bands up to and including 2.1 GHz). Using the HAP DL spectral efficiency and coverage area, we get a throughput density of 70+ Mbps/km² and an effective rural cell throughput of 870 Mbps. In terrestrial-based cellular coverage, the contribution to quality at higher frequencies degrades rapidly as a function of the distance to the antenna. This is not the case for HAP-based coverage due to its near-ideal signal propagation.
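A back-of-the-envelope Python sketch of two of these figures follows. Two assumptions are mine and not from the text: Germany's area is taken as roughly 357,600 km², and each drone footprint is treated as a non-overlapping circle of 50 km radius, which reproduces the drone count of ca. 46. A hexagonal tiling, or any allowance for overlap, would require more drones.

```python
import math

GERMANY_AREA_KM2 = 357_600   # assumption: approximate area of Germany
DRONE_RADIUS_KM = 50.0       # coverage radius per drone from the example

# Idealized circular footprint per drone (assumption: no overlap between drones).
footprint_km2 = math.pi * DRONE_RADIUS_KM ** 2
drones_needed = math.ceil(GERMANY_AREA_KM2 / footprint_km2)

# Effective cell throughput = DL bandwidth x effective spectral efficiency.
hap_cell_mbps = 145 * 6.0          # HAP: 145 MHz at 6 Mbps/MHz/cell
terrestrial_cell_mbps = 85 * 2.0   # rural terrestrial example: 85 MHz at 2 Mbps/MHz/cell

print(f"drones needed:         {drones_needed}")
print(f"HAP cell throughput:   {hap_cell_mbps:.0f} Mbps")
print(f"terrestrial cell:      {terrestrial_cell_mbps:.0f} Mbps")
print(f"HAP vs terrestrial:    {hap_cell_mbps / terrestrial_cell_mbps:.1f}x")
```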

In comparison, the three incumbent German operators have on average ca. 30±4k sites per operator with an average terrestrial coverage area of 12 km² and a coverage radius of ca. 2.0 km (i.e., smaller in cities, ~1.3 km, and larger in rural areas, ~2.7 km). Assume that the average annual cost of ownership related only to the passive part of a site is 20+ thousand euros and that 50% of the 30k sites (expect a higher number) would be redundant as the rural coverage would be replaced by stratospheric drones. Such a site reduction would, conservatively, lead to a minimum gross cost reduction of 300 million euros annually (not considering the cost of the alternative technology coverage solution).
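The gross saving is simple multiplication, sketched below with the lower-bound figures from the example. The variable names are illustrative, and the 20 thousand euros is treated as an annual cost per site, which is what the annual saving implies.

```python
sites_per_operator = 30_000        # average number of sites per operator
rural_share = 0.50                 # share of sites made redundant (stated as a minimum)
passive_cost_per_site_eur = 20_000 # annual passive cost of ownership per site (lower bound)

redundant_sites = int(sites_per_operator * rural_share)
annual_gross_saving_eur = redundant_sites * passive_cost_per_site_eur

print(f"redundant sites:     {redundant_sites:,}")
print(f"annual gross saving: {annual_gross_saving_eur / 1e6:.0f} million euros")
```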

In our example, the question is whether we can operate a stratospheric drone-based platform covering rural Germany for less than 300 million euros yearly. Let’s examine this question. Say the stratospheric drone price is 1 million euros per piece (similar to the current Starlink satellite price, excluding the launch cost, which would add another 1.1 million euros to the satellite cost). For redundancy and availability purposes, we assume we need 100 stratospheric drones to cover rural Germany, allowing me to decommission in the region of 15 thousand rural terrestrial sites. The decommissioning cost and the economically right timing of tower contract termination need to be considered. Due to the standard long-term contracts, it may take 5 (optimistic) to 10+ (realistic) years before the rural network termination could be completed. Many Telecom businesses that have spun out their passive site infrastructure have done so in mutual captivity with the Tower management company and may have committed to very “sticky” contracts that have very little flexibility in terms of site termination at scale (e.g., 2% annually allowed over the total portfolio).

We have a capital expense of 100 million euros for the stratospheric drones (100 drones at 1 million euros each). We also have to establish the support infrastructure (e.g., ground stations, airfield suitability rework, development, …) and consider operational expenses. The ballpark figure would be around 100 million euros of Capex for establishing the supporting infrastructure and another 30 million euros in annual operational expenses. In terms of steady-state Capex, it should be at most 20 million euros per year. In our example, the terrestrial rural network would have cost 3 billion euros, mainly Opex, over ten years compared to 700 million euros, a little less than half of it Opex, for the stratospheric drone-based platform (not considering inflation).
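The ten-year comparison can be laid out as a short Python sketch. All amounts are in million euros and taken from the example; the one assumption added here is that the steady-state Capex of 20 million euros is incurred in each of the ten years, which is what makes the total come to 700 million euros.

```python
YEARS = 10

# Terrestrial rural network: gross cost avoided per year (million euros).
terrestrial_annual = 300
terrestrial_total = YEARS * terrestrial_annual

# Stratospheric platform (million euros).
drone_capex = 100 * 1        # 100 drones at 1 million euros each
support_capex = 100          # ground stations, airfield rework, development
annual_opex = 30
steady_state_capex = 20      # assumption: applied in every one of the ten years

hap_opex_total = YEARS * annual_opex
hap_total = drone_capex + support_capex + hap_opex_total + YEARS * steady_state_capex
hap_opex_share = hap_opex_total / hap_total

print(f"terrestrial, 10 years: {terrestrial_total} million euros")
print(f"HAP platform, 10 years: {hap_total} million euros")
print(f"HAP Opex share: {hap_opex_share:.0%}")
```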

The economics of a stratospheric unmanned and autonomous drone-based coverage platform should be superior to those of the current terrestrial cellular coverage platform. As the stratospheric coverage platform scales and more stratospheric drones are deployed, the unit price is also likely to fall accordingly.

Spectrum usage rights: yet another critical piece.

It should be emphasized that the deployment of cellular frequency spectrum in stratospheric and LEO satellite contexts is governed by a combination of technical feasibility, regulatory frameworks, coordination to prevent interference, and operational needs. The ITU, along with national regulatory bodies, plays a central role in deciding the operational possibilities and balancing the needs and concerns of various stakeholders, including satellite operators, terrestrial network providers, and other spectrum users. Today, there are many restrictions and direct regulatory prohibitions in repurposing terrestrially assigned cellular frequencies for non-terrestrial purposes.

The role of the World Radiocommunication Conference (WRC) is pivotal in managing the global radio-frequency spectrum and satellite orbits. Its decisions directly impact the development and deployment of various radiocommunication services worldwide, ensuring their efficient operation and preventing interference across borders. The WRC’s work is fundamental to the smooth functioning of global communication networks, from television and radio broadcasting to cellular networks and satellite-based services. The WRC is typically held every three to four years, with the latest one, WRC-23, held in Dubai at the end of 2023. Reference [13] provides the provisional final acts of WRC-23 (December 2023). In a landmark recommendation, WRC-23 relaxed the terrestrial-only conditions for the 698 to 960 MHz, 1.71 to 2.17 GHz, and 2.5 to 2.69 GHz frequency bands to also apply to high-altitude platform station (HAPS) base stations (“Antennas-in-the-Sky”). It should be noted that there are slightly different frequency band ranges and conditions, depending on which of the three ITU-R regions (as well as exceptions for particular countries within a region) the system will be deployed in. Also, the HAPS systems do not enjoy protection or priority over existing terrestrial use of those frequency bands. It is important to note that the WRC-23 recommendation only applies to coverage platforms (i.e., HAPS) in the range from 20 to 50 km altitude. This WRC-23 frequency-band relaxation does not apply to satellite operation. With the recognized importance of non-terrestrial networks and the current standardization efforts (e.g., towards 6G), it is expected that the fairly restrictive regime on terrestrial cellular spectrum may be relaxed further to also allow mobile terrestrial spectrum to be used in “Antenna-in-the-Sky” coverage platforms.
Nevertheless, HAPS and terrestrial use of cellular frequency spectrum will have to be coordinated to avoid interference and the resulting capacity and quality degradation.

SoftBank announced recently (i.e., 28 December 2023 [11]), after deliberations at the WRC-23, that they had successfully gained approval within the Asia-Pacific region (i.e., ITU-R region 3) to use mobile spectrum bands, namely 700-900MHz, 1.7GHz, and 2.5GHz, for stratospheric drone-based mobile broadband cellular services (see also ref. [13]). As a result of this decision, operators in different countries and regions will be able to choose spectrum with greater flexibility when they introduce HAPS-based mobile broadband communication services, thereby enabling seamless usage with existing smartphones and other devices.

Another example of re-using terrestrial licensed cellular spectrum above ground is SpaceX’s direct-to-cell-capable 2nd generation Starlink satellites.

On January 2nd, 2024, SpaceX launched their new generation of Starlink satellites with direct-to-cell capabilities, which can establish a connection to a regular mobile cellular phone (e.g., smartphone). The new direct-to-cell Starlink satellites use T-Mobile US’s terrestrially licensed cellular frequency band (i.e., 2×5 MHz Band 25, PCS G-block) and will work, according to T-Mobile US, with most of their existing mobile phones. The initial direct-to-cell commercial plans will only support low-bandwidth text messaging and no voice or more bandwidth-heavy applications (e.g., streaming). Expectations are that the direct-to-cell system would deliver up to 18.3 Mbps (3.66 Mbps/MHz/cell) downlink and up to 7.2 Mbps (1.44 Mbps/MHz/cell) uplink over a channel bandwidth of 5 MHz (maximum).
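
The per-MHz figures in parentheses follow directly from dividing the expected peak throughput by the 5 MHz channel bandwidth. The short sketch below reproduces that arithmetic; the function and variable names are illustrative only and do not come from any SpaceX or T-Mobile specification.

```python
# Peak rates and channel bandwidth as quoted in the text above.
CHANNEL_MHZ = 5.0
DOWNLINK_MBPS = 18.3
UPLINK_MBPS = 7.2


def spectral_efficiency(throughput_mbps: float, bandwidth_mhz: float) -> float:
    """Return spectral efficiency in Mbps per MHz (numerically equal to bit/s/Hz)."""
    return throughput_mbps / bandwidth_mhz


dl_eff = spectral_efficiency(DOWNLINK_MBPS, CHANNEL_MHZ)
ul_eff = spectral_efficiency(UPLINK_MBPS, CHANNEL_MHZ)
print(f"Downlink: {dl_eff:.2f} Mbps/MHz, uplink: {ul_eff:.2f} Mbps/MHz")
```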

Given that terrestrial 4G LTE systems struggle with such performance, it will be super interesting to see what the actual performance of the direct-to-cell satellite constellation will be.

COMPARISON WITH LEO SATELLITE BROADBAND NETWORKS.

When juxtaposed with LEO satellite networks such as Starlink (SpaceX), OneWeb (Eutelsat Group), or Kuiper (Amazon), stratospheric drones offer several advantages. Firstly, their proximity to the Earth’s surface (ca. 20 km, compared with 300 to 2,000 km for LEO satellites) results in lower latency, a critical factor for real-time applications. While LEO satellites, like those used by Starlink, have reduced latency (ca. 3 ms round-trip-time) compared to traditional geostationary satellites (ca. 240 ms round-trip-time), stratospheric drones can provide even quicker response times (one-tenth of an ms in round-trip-time), making the stratospheric drone substantially more beneficial for applications such as emergency services, telemedicine, and high-speed internet services.
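
The round-trip times quoted above are essentially pure propagation delays. As a rough sketch, assuming the platform is directly overhead, the signal travels at the vacuum speed of light, and all processing, scheduling, and ground-segment delays are ignored, they can be estimated as follows. The 20 km and 550 km altitudes are those used elsewhere in this article; the geostationary altitude of 35,786 km is the standard value.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # vacuum speed of light


def round_trip_ms(altitude_km: float) -> float:
    """Propagation-only round-trip time (ground -> platform -> ground) in ms.

    Assumes the platform is at zenith, so the path length equals the altitude.
    """
    return 2.0 * altitude_km / SPEED_OF_LIGHT_KM_S * 1000.0


platforms_km = {
    "Stratospheric drone": 20.0,
    "LEO satellite (Starlink shell)": 550.0,
    "Geostationary satellite": 35_786.0,
}

for name, altitude in platforms_km.items():
    print(f"{name:32s} {round_trip_ms(altitude):8.3f} ms")
```

Real-world latency will be higher than these figures, since users are rarely directly below the platform and the radio and core network add their own delays.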

A stratospheric platform operating at 20 km altitude and targeting surveillance, all else being equal, would be 25 times better at distinguishing objects apart than an LEO satellite operating at 500 km altitude. The global aerial imaging market is expected to exceed 7 billion euros by 2030, with a CAGR of 14.2% from 2021. The flexibility of the stratospheric drone platform allows for combining cellular broadband services and a wide range of advanced aerial imaging services. Again, it is advantageous that the stratospheric drone regularly returns to Earth for fueling, maintenance, and technology upgrades and enhancements. This is not possible with an LEO satellite platform.

Moreover, the deployment and maintenance of stratospheric drones are, in theory, less complex and costly than launching and maintaining a constellation of satellites. While Starlink and similar projects require significant upfront investment for satellite manufacturing and rocket launches, stratospheric drones can be deployed at a fraction of the cost, making them a more economically viable option for many applications.

The Starlink LEO satellite constellation is currently the most comprehensive satellite (fixed) broadband coverage service. As of November 2023, Starlink had more than 5,000 satellites in low orbit (i.e., ca. 550 km altitude), and an additional 7,000+ are planned to be deployed, with a total target of 12+ thousand satellites. The current generation of Starlink satellites has three downlink phased-array antennas and one uplink phased-array antenna. This specification translates into 48 beams downlink (satellite to ground) and 16 beams uplink (ground to satellite). Each Starlink beam covers approx. 2,800 km² with a coverage range of ca. 30 km, over which a 250 MHz downlink channel (in the Ku band) has been assigned. According to Portillo et al. [14], the spectral efficiency is estimated to be 2.7 Mbps per MHz, providing a total throughput of a maximum of 675 Mbps in the coverage area or a throughput density of ca. 0.24 Mbps per km².
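
The beam figures above can be cross-checked with a few lines of arithmetic. This is a minimal sketch that treats the beam footprint as a circle of 30 km radius and assumes the full 250 MHz channel is usable at the quoted spectral efficiency; the variable names are illustrative.

```python
import math

BEAM_RADIUS_KM = 30.0         # coverage range per beam, from the text
DOWNLINK_MHZ = 250.0          # Ku-band downlink channel per beam
SPECTRAL_EFF_MBPS_MHZ = 2.7   # estimate attributed to Portillo et al.

# Circular footprint approximation of a single beam.
beam_area_km2 = math.pi * BEAM_RADIUS_KM ** 2

# Maximum throughput shared by all users inside the beam.
beam_throughput_mbps = DOWNLINK_MHZ * SPECTRAL_EFF_MBPS_MHZ

# Average capacity available per square kilometer of coverage.
throughput_density = beam_throughput_mbps / beam_area_km2

print(f"Beam area:          {beam_area_km2:,.0f} km2")
print(f"Beam throughput:    {beam_throughput_mbps:.0f} Mbps")
print(f"Throughput density: {throughput_density:.2f} Mbps per km2")
```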

According to Ookla’s Q2-2023 speed test data, “among the 27 European countries that were surveyed, Starlink had median download speeds greater than 100 Mbps in 14 countries, greater than 90 Mbps in 20 countries, and greater than 80 in 24 countries, with only three countries failing to reach 70 Mbps” (see reference [18]). Of course, the actual customer experience will depend on the number of concurrent users demanding resources from the LEO satellite as well as weather conditions, proximity of other users, etc. Starlink themselves seem to have set an upper limit of 220 Mbps download speed for their so-called priority service plan or otherwise 100 Mbps (see [19] below). Quite impressive performance if there are no other broadband alternatives available.

According to Elon Musk, SpaceX aims to reduce each Starlink satellite’s cost to less than one million euros, although the unit price will depend on the design, capabilities, and production volume. The launch cost using the SpaceX Falcon 9 launch vehicle starts at around 57 million euros, and thus, with ca. 50 satellites per launch, adds a launch cost of ca. 1.1 million euros per satellite. SpaceX operates, as of September 2023, 150 ground stations (“Starlink Gateways”) globally that connect the satellite network with the internet and ground operations. At Starlink’s operational altitude, the estimated satellite lifetime is between 5 and 7 years due to orbital decay, fuel and propulsion system exhaustion, and component durability. Thus, a LEO satellite business must plan for satellite replacement cycles. This situation differs greatly from the stratospheric drone-based operation, where the vehicles can be continuously maintained and upgraded. Thus, they are significantly more durable, with an expected useful lifetime exceeding ten years and possibly even 20 years of operational use.

Let’s consider our example of Germany and what it would take to provide LEO satellite coverage service targeting rural areas. It is important to understand that a LEO satellite travels at very high speeds (ca. 27 thousand km per hour) and thus completes an orbit around Earth in 90 to 120 minutes (depending on the satellite’s altitude). It is even more important to remember that Earth rotates on its axis (i.e., 24 hours for a full rotation), so the targeted coverage area will have moved relative to a given satellite orbit by the time the satellite returns (this can easily be several hundred to thousands of kilometers). Thus, to ensure continuous satellite broadband coverage of the same area on Earth, we need a certain number of satellites in a particular orbit as well as several orbits. We would need at least 210 satellites to provide continuous coverage of Germany. Most of the time, most satellites would not cover Germany, and the operational satellite utilization will be very low unless other areas outside Germany are also being serviced.
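
The orbital period, speed, and ground drift mentioned above can be estimated from Kepler’s third law. The sketch below assumes a circular orbit, a spherical Earth with mean radius 6,371 km, and standard values for Earth’s gravitational parameter and sidereal day; it is meant as an order-of-magnitude illustration rather than a constellation design tool.

```python
import math

MU_EARTH_KM3_S2 = 398_600.4418   # Earth's standard gravitational parameter
EARTH_RADIUS_KM = 6_371.0        # mean Earth radius
SIDEREAL_DAY_S = 86_164.1        # one full rotation of Earth
EQUATOR_LENGTH_KM = 40_075.0     # Earth's equatorial circumference


def orbital_period_s(altitude_km: float) -> float:
    """Period of a circular orbit at the given altitude (Kepler's third law)."""
    a = EARTH_RADIUS_KM + altitude_km
    return 2.0 * math.pi * math.sqrt(a ** 3 / MU_EARTH_KM3_S2)


def orbital_speed_kmh(altitude_km: float) -> float:
    """Speed of a satellite in a circular orbit, in km per hour."""
    a = EARTH_RADIUS_KM + altitude_km
    return math.sqrt(MU_EARTH_KM3_S2 / a) * 3600.0


def equatorial_shift_km(altitude_km: float) -> float:
    """Distance a point on the equator moves during one orbital period."""
    return EQUATOR_LENGTH_KM * orbital_period_s(altitude_km) / SIDEREAL_DAY_S


altitude = 550.0  # Starlink-like shell
print(f"Period: {orbital_period_s(altitude) / 60:.1f} min")
print(f"Speed:  {orbital_speed_kmh(altitude):,.0f} km/h")
print(f"Shift:  {equatorial_shift_km(altitude):,.0f} km per orbit at the equator")
```

For a 550 km orbit, this gives a period of roughly 95 minutes and a ground drift of well over 2,000 km per orbit at the equator (less at Germany’s latitude), which is why multiple satellites in multiple orbital planes are needed for continuous coverage.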

Economically, using the Starlink numbers above as a guide, we incur a capital expense of upwards of 450 million euros to realize a satellite constellation that could cover Germany. Let’s also assume that the LEO satellite broadband operator (e.g., Starlink) must build and launch 20 satellites annually to maintain its constellation and thus incur an additional Capex of ca. 40+ million euros annually. This amount does not account for the Capex required to build the ground network and the operations center. Let’s say all the rest requires an additional 10 million euros Capex to realize and for miscellaneous going forward. The technology-related operational expenses should be low, at most 30 million euros annually (this is a guesstimate!) and likely less. So, covering Germany with an LEO broadband satellite platform over ten years would cost ca. 1.3 billion euros. Although substantially more costly than our stratospheric drone platform, it is still less costly than running a rural terrestrial mobile broadband network.
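
The cost estimate above can be laid out as a simple model. This is a rough sketch under stated assumptions: the satellite unit cost is taken at its one-million-euro target, 50 satellites share one Falcon 9 launch, and replenishment and opex run for all ten years. Because the text leaves open whether the additional 10 million euros is a one-off or a recurring annual amount, both readings are computed; all names are illustrative.

```python
# Inputs in million euros (MEUR), taken from the surrounding text.
SATELLITES_FOR_GERMANY = 210
SATELLITE_UNIT_COST_MEUR = 1.0     # assumption: the "less than one million" target
LAUNCH_COST_MEUR = 57.0
SATELLITES_PER_LAUNCH = 50
REPLACEMENTS_PER_YEAR = 20
OTHER_CAPEX_MEUR = 10.0            # ground network, operations center, misc.
ANNUAL_OPEX_MEUR = 30.0            # the article's guesstimate
YEARS = 10

# Built-and-launched cost of a single satellite.
cost_per_satellite = SATELLITE_UNIT_COST_MEUR + LAUNCH_COST_MEUR / SATELLITES_PER_LAUNCH

initial_capex = SATELLITES_FOR_GERMANY * cost_per_satellite
annual_replacement = REPLACEMENTS_PER_YEAR * cost_per_satellite


def ten_year_cost(other_capex_is_annual: bool) -> float:
    """Total ten-year cost in MEUR under one of the two readings of 'other' capex."""
    other = OTHER_CAPEX_MEUR * (YEARS if other_capex_is_annual else 1)
    return initial_capex + annual_replacement * YEARS + other + ANNUAL_OPEX_MEUR * YEARS


print(f"Initial constellation capex: {initial_capex:,.1f} MEUR")
print(f"Annual replenishment capex:  {annual_replacement:,.1f} MEUR")
print(f"Ten-year cost (one-off other capex): {ten_year_cost(False):,.1f} MEUR")
print(f"Ten-year cost (annual other capex):  {ten_year_cost(True):,.1f} MEUR")
```

Under these assumptions, the model lands between ca. 1.2 and 1.3 billion euros over ten years, with the recurring reading coming closest to the ca. 1.3 billion euros quoted above.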

Despite comparing favorably in economic terms to the terrestrial cellular network, it is highly unlikely to make any operational and economic sense for a single operator to finance such a network, and it would probably only make sense if shared between telecom operators in a country and even more so over multiple countries or states (e.g., European Union, United States, PRC, …).

Despite the implied silliness of a single mobile operator deploying a satellite constellation for a single Western European country (irrespective of it being fairly large), the above example serves two purposes: (1) it illustrates how economically inefficient rural mobile networks are, to the point that even a fairly expensive satellite constellation could be more favorable (keep in mind that most countries have 3 or 4 such rural networks), and (2) it shows that sharing the economics of a LEO satellite constellation among operators over a larger areal footprint may make such a strategy very attractive economically.

Due to the path loss at 550 km (LEO) being substantially higher than at 20 km (stratosphere), all else being equal, the signal quality of the stratospheric broadband drone would be significantly better than that of the LEO satellite. However, designing the LEO satellite with more powerful transmitters and sensitive receivers can compensate for the factor of almost 30 in altitude difference to a certain extent. Clearly, the latency performance of the LEO satellite constellation would be inferior to that of the stratospheric drone-based platform due to the significantly higher operating altitude.
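
The difference in path loss can be quantified with the standard free-space path loss formula. The sketch below assumes the platform is directly overhead (so the path length equals the altitude), uses the 1900 MHz PCS band as an example carrier, and ignores antenna gains, atmospheric losses, and clutter.

```python
import math


def fspl_db(distance_km: float, frequency_mhz: float) -> float:
    """Free-space path loss in dB for a distance in km and a frequency in MHz."""
    return 20.0 * math.log10(distance_km) + 20.0 * math.log10(frequency_mhz) + 32.44


FREQUENCY_MHZ = 1900.0  # example: PCS band, as used for direct-to-cell

loss_drone_db = fspl_db(20.0, FREQUENCY_MHZ)   # stratospheric platform
loss_leo_db = fspl_db(550.0, FREQUENCY_MHZ)    # LEO satellite
delta_db = loss_leo_db - loss_drone_db

print(f"Stratospheric drone (20 km): {loss_drone_db:.1f} dB")
print(f"LEO satellite (550 km):      {loss_leo_db:.1f} dB")
print(f"Difference:                  {delta_db:.1f} dB")
```

The difference depends only on the distance ratio, not on the frequency: a factor of 27.5 in distance corresponds to almost 29 dB, or a received power roughly 750 times lower for the LEO satellite, which is what its more powerful transmitters and higher-gain antennas have to make up for.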

It is, however, capacity rather than shared cost that could be the stumbling block for LEOs. For a rural cellular network or a stratospheric drone platform, we see the MNOs effectively having “control” over the capex costs of the network, whether it be the RAN element for a terrestrial network or the cost of the whole drone network (even if, in the future, this might become a shared cost).

However, for the LEO constellation, we think the economics of a single MNO building a LEO constellation, even for their own market, is almost entirely out of the question (i.e., a multiple €bn capex outlay). Hence, in this situation, the MNOs will rely on a global LEO provider (e.g., Starlink or AST SpaceMobile) and will “lend” their spectrum to that provider in their respective geography in order to provide service. Like the HAPs, this will also require further regulatory approvals in order to free up terrestrial spectrum for satellites in rural areas.

We do not yet have the visibility of the payments the LEOs will require, so there is the potential that this could be a lower cost alternative again to rural networks, but as we show below, we think the real limitation for LEOs might not be the shared capacity rental cost, but that there simply won’t be enough capacity available to replicate what a terrestrial network can offer today.

However, the stratospheric drone-based platform provides a near-ideal cellular performance to the consumer, close to the theoretical peak performance of a terrestrial cellular network. It should be emphasized that the theoretical peak cellular performance is typically only experienced, if at all, by consumers if they are very near the terrestrial cellular antenna and in a near free-space propagation environment. This situation is a very rare occurrence for the vast majority of mobile consumers.

Figure 7 summarizes the above comparison between a rural terrestrial cellular network and non-terrestrial cellular networks such as LEO satellites and stratospheric drones.

While the majority of the 5,500+ satellite Starlink constellation operates at 13 GHz (Ku-band), at the beginning of 2024, SpaceX launched a few 2nd generation Starlink satellites that support direct connections from the satellite to a normal cellular device (e.g., smartphone), using 5 MHz of T-Mobile USA’s PCS band (1900 MHz). The targeted consumer service, as expressed by T-Mobile USA, is providing texting capabilities over areas with no or poor existing cellular coverage across the USA. This is fairly similar to the services presently offered in comparable coverage areas by, for example, AST SpaceMobile, OmniSpace, and Lynk Global LEO satellite services, with reported maximum speeds approaching 20 Mbps. The so-called Direct-2-Device service, where the device is a normal smartphone without satellite connectivity functionality, is expected to develop rapidly over the next 10 years and continue to increase the supported user speeds (i.e., utilized terrestrial cellular spectrum) and system capacity in terms of smaller coverage areas and a higher number of satellite beams.

Table 1 below provides an overview of the top 10 LEO satellite constellations targeting (fixed) internet services (e.g., Ku band), IoT and M2M services, and Direct-to-Device (or direct-to-cell) services. The data has been compiled from the NewSpace Index website, with data as of 31st of December 2023. The Top-10 satellite constellation rank has been based on the number of launched satellites until the end of 2023. Two additional Direct-2-Cell (D2C or Direct-to-Device, D2D) LEO satellite constellations are planned for 2024-2025. One is SpaceX Starlink 2nd generation, which launched at the beginning of 2024, using T-Mobile USA’s PCS Band to connect (D2D) to normal terrestrial cellular handsets. The other D2D (D2C) service is Inmarsat’s Orchestra satellite constellation based on L-band (for mobile terrestrial services) and Ka for fixed broadband services. One new constellation (Mangata Networks) is targeting 5G services, and two 5G constellations have already launched: Galaxy Space (Yinhe) has launched 8 LEO satellites out of 1,000 planned, using Q- and V-bands (i.e., not a D2D cellular 5G service), and OmniSpace has launched two satellites out of 200 planned in total. Moreover, there is currently one planned constellation targeting 6G, by the South Korean Hanwha Group (a bit premature, but interesting nevertheless), with 2,000 6G LEO satellites planned. Most currently launched and planned satellite constellations that offer (or plan to offer) Direct-2-Cell services, including IoT and M2M, are designed for services with limited frequency bandwidth that are unlikely to compete with terrestrial cellular networks’ quality of service where reasonably good coverage (or better) exists.

In Table 1 below, we then show 5 different services with the key input variables, such as cell radius, spectral efficiency, and downlink spectrum. From this we can derive what the “average” capacity could be per square kilometer of rural coverage.

We focus on this metric as the best measure of the capacity available once multiple users are on the service and the available spectrum is shared. This is different from “peak” speeds, which are only relevant in the case of very few users per cell.

- We start with terrestrial cellular today for bands up to 2.1GHz and show that assuming a 2.5km cell radius, the average capacity is equivalent to 11Mbps per sq.km.

- For a LEO service using Ku-band, i.e., with 250MHz to an FWA dish, the capacity could be ca. 2Mbps per sq.km.

- For a LEO-based D2D device, what is unknown is what the ultimate spectrum allowance could be for satellite services with cellular spectrum bands, and spectral efficiency. Giving the benefit of the doubt on both, but assuming the beam radius is always going to be larger, we can get to an “optimistic” future target of 2Mbps per sq. km, i.e., 1/5th of a rural terrestrial network.

- Finally, we show for a stratospheric drone, that given similar cell radius to a rural cell today, but with higher downlink available and greater spectral efficiency, we can reach ca. 55Mbps per sq. km, i.e. 5x what a current rural network can offer.

INTEGRATING WITH 5G AND BEYOND.

The advent of 5G, and eventually 6G, technology brings another dimension to the utility of stratospheric drones delivering mobile broadband services. The high-altitude platform’s ability to seamlessly integrate with existing 5G networks makes it an attractive option for expanding coverage and enhancing network capacity at superior economics, particularly in rural areas where the economics for terrestrial-based cellular coverage tend to be poor. Unlike terrestrial networks that require extensive groundwork for 5G rollout, the non-terrestrial network operator (NTNO) can rapidly deploy stratospheric drones to provide immediate 5G coverage over large areas. The high-altitude platform is also incredibly flexible compared to both LEO satellite constellations and conventional rural cellular networks. The platform can easily be upgraded during its ground maintenance window and can be enhanced as the technology evolves. For example, upgrading to and operationalizing 6G would be far more economical with a stratospheric platform than having to visit thousands or more rural sites to modernize or upgrade the installed active infrastructure.

SUMMARY.

Stratospheric drones represent a significant advancement in the realm of wireless communication. Their strategic positioning in the stratosphere offers superior coverage and connectivity compared to terrestrial networks and low-earth satellite solutions. At the same time, their economic efficiency makes them an attractive alternative to ground-based infrastructures and LEO satellite systems. As technology continues to evolve, these high-altitude platforms (HAPs) are poised to play a crucial role in shaping the future of global broadband connectivity and ultra-high availability connectivity solutions, complementing the burgeoning 5G networks and paving the way for next-generation three-dimensional communication solutions that move away from today’s flat-earth terrestrial-locked communication platforms.

The strategic as well as the disruptive potential of the unmanned autonomous stratospheric terrestrial coverage platform is enormous, as shown in this article. It has the potential to make most of the cellular infrastructure, at least in rural areas, redundant, resulting in substantial operational and economic benefits to existing mobile operators. At the same time, the HAPs could, in rural areas, provide much better service overall in terms of availability, improved coverage, and near-ideal speeds compared to what is the case in today’s cellular networks. It might also, at scale, become a serious competitive and economic threat to LEO satellite constellations, such as Starlink and Kuiper, which would struggle to compete on service quality and capacity with a stratospheric coverage platform.

Although the strategic, economic, as well as disruptive potential of the unmanned autonomous stratospheric terrestrial coverage platform is enormous, as shown in this article, the flight platform and advanced antenna technology are still in a relatively early development phase. Substantial regulatory work remains in terms of permitting the terrestrial cellular spectrum to be re-used above terra firma at the “Antenna-in-the-Sky”. The latest developments out of WRC-23 for Asia Pacific appear very promising, showing that we are moving in the right direction of re-using terrestrial cellular spectrum in high-altitude coverage platforms. Last but not least, operating an unmanned (autonomous) stratospheric platform involves obtaining certifications as well as permissions and complying with various flight regulations at both national and international levels.

Terrestrial Mobile Broadband Network – takeaway:

- It is the de facto practice for mobile cellular networks to cover nearly 100% geographically. The mobile consumer expects a high-quality, high-availability service everywhere.

- A terrestrial mobile network has a relatively low area coverage per unit antenna with relatively high capacity and quality.

- Mobile operators incur high and sustained infrastructure costs, especially in rural areas with low or no return on that cost.

- Physical obstructions and terrain limit performance (i.e., non-free space characteristics).

- Well-established technology with high reliability.

- Terrestrial networks can deliver high bandwidth and low latency in high-demand urban areas, which may become a limiting factor for LEO satellite constellations and stratospheric drone-based platforms. Thus, non-terrestrial platforms are less likely to provide operational and economic benefits when covering high-demand dense urban and urban areas.

LEO Satellite Network – takeaway:

- The technology is operational and improving. There is currently some competition (e.g., Starlink, Kuiper, OneWeb, etc.) in this space, primarily targeting fixed broadband and satellite backhaul services. Increasingly, new LEO satellite-based business models are launched providing lower-bandwidth cellular-spectrum based direct-to-device (D2D) text, 4G and 5G services to regular consumer and IoT devices (i.e., Starlink, Lynk Global, AST SpaceMobile, OmniSpace, …).

- Broader coverage, suitable for global reach. It may only make sense when the business model is viewed from a worldwide reach perspective (e.g., Starlink, OneWeb,…), resulting in much-increased satellite network utilization.

- An LEO satellite broadband network can cover a vast area per satellite due to its high altitude. However, such systems are by nature capacity-limited, although beam-forming antenna technologies (e.g., phased array antennas) allow better capacity utilization.

- The LEO satellite solutions are best suited for low-population areas with limited demand, such as rural and largely unpopulated areas (e.g., sea areas, deserts, coastlines, Greenland, polar areas, etc.).

- Much higher latency compared to terrestrial and drone-based networks.

- Less flexible once in orbit. Upgrades and modernization only via replacement.

- The LEO satellite has a limited useful operational lifetime due to its lower orbital altitude (e.g., 5 to 7 years).

- Lower infrastructure cost for rural coverage compared to terrestrial networks, but substantially higher than drones when targeting regional areas (e.g., Germany or individual countries in general).

- Complementary to the existing mobile business model of communications service providers (CSPs) with a substantial business risk to CSPs in low-population areas where little to no capacity limitations may occur.

- Requires regulatory permission (authorization) to operate terrestrial frequencies on the satellite platform over any given country. This process is overseen by national regulators (e.g., the FCC in the USA) in coordination with the International Telecommunication Union (ITU). Satellite operators must apply for frequency bands for uplink and downlink communications and coordinate with the ITU to avoid interference with other satellites and terrestrial systems. In recent years, however, there has been a trend towards more flexible spectrum regulations, allowing for innovative uses of the spectrum like integrating terrestrial and satellite services. This flexibility is crucial in accommodating new technologies and service models.

- Operating a LEO satellite constellation requires a comprehensive set of permissions and certifications that encompass international and national space regulations, frequency allocation, launch authorization, adherence to space debris mitigation guidelines, and various liability and insurance requirements.

- Both LEO and MEO satellites are likely to be complementary or supplementary to stratospheric drone-based broadband cellular networks, offering high-performing transport solutions and possibly even acting as standalone or integrated (with terrestrial networks) 5G core networks or “clouds-in-the-sky”.

Stratospheric Drone-Based Network – takeaway:

- It is an emerging technology with ongoing research, trials, and proof of concept.

- A stratospheric drone-based broadband network will have lower deployment costs than terrestrial and LEO satellite broadband networks.

- In rural areas, the stratospheric drone-based broadband network offers better economics than terrestrial mobile networks, with near-ideal quality. In terms of cell size and capacity, it can easily match that of a rural mobile network.

- The solution offers flexibility and versatility and can be geographically repositioned as needed. The versatility provides a much broader business model than “just” an alternative rural coverage solution (e.g., aerial imaging, surveillance, defense scenarios, disaster area support, etc.).

- Reduced latency compared to LEO satellites.

- Also ideal for targeted or temporary coverage needs.

- Complementary to the existing mobile business model of communications service providers (CSPs) with additional B2B and public services business potential from its application versatility.

- Potential substantial negative impact on the telecom tower business as the stratospheric drone-based broadband network would make (at least) rural terrestrial towers redundant.

- May disrupt a substantial part of the LEO satellite business model due to better service quality and capacity leaving the LEO satellite constellations revenue pool to remote areas and specialized use cases.

- Requires regulatory permission to operate terrestrial frequencies (i.e., frequency authorization) on the stratospheric drone platform (similar to LEO satellites). Big steps have already been made at the latest WRC-23, where the frequency bands 698 to 960 MHz, 1710 to 2170 MHz, and 2500 to 2690 MHz have been relaxed to allow for use in HAPS operating at 20 to 50 km altitude (i.e., the stratosphere).

- Operating a stratospheric platform in European airspace involves obtaining certifications as well as permissions and (of course) complying with various regulations at both national and international levels. This includes the European Union Aviation Safety Agency (EASA) type certification and the national civil aviation authorities in Europe.

FURTHER READING.

- New Street Research “Stratospheric drones: A game changer for rural networks?” (January 2024).

- https://hapsalliance.org/

- https://www.stratosphericplatforms.com/, see also “Beaming 5G from the stratosphere” (June, 2023) and “Cambridge Consultants building the world’s largest commercial airborne antenna” (2021).

- Iain Morris, “Deutsche Telekom bets on giant flying antenna”, Light Reading (October 2020).

- “Deutsche Telekom and Stratospheric Platforms Limited (SPL) show Cellular communications service from the Stratosphere” (November 2020).

- “High Altitude Platform Systems: Towers in the Skies” (June 2021).

- “Stratospheric Platforms successfully trials 5G network coverage from HAPS vehicle” (March 2022).

- Leichtwerk AG, “High Altitude Platform Stations (HAPS) – A Future Key Element of Broadband Infrastructure” (2023). I recommend closely following Leichtwerk AG, which is a world champion in making advanced gliding planes. The hydrogen-powered StratoStreamer HAP is near-production ready, and they are currently working on a solar-powered platform. Germany is renowned for producing some of the best gliding planes in the world (after WWII, Germany was banned from developing and producing aircraft, military as well as civil; these restrictions were only relaxed in the 60s). Germany has a long and distinguished history in glider development, dating back to the early 20th century. German manufacturers like Schleicher, Schempp-Hirth, and DG Flugzeugbau are among the world’s leading producers of high-quality gliders. These companies are known for their innovative designs, advanced materials, and precision engineering, contributing to Germany’s reputation in this field.

- Jerzy Lewandowski, “Airbus Aims to Revolutionize Global Internet Access with Stratospheric Drones” (December 2023).

- Utilities One, “An Elevated Approach High Altitude Platforms in Communication Strategies”, (October 2023).

- Rajesh Uppal, “Stratospheric drones to provide 5g wireless communications global internet border security and military surveillance” (May 2023).

- Softbank, “SoftBank Corp.-led Proposal to Expand Spectrum Use for HAPS Base Stations Agreed at World Radiocommunication Conference 2023 (WRC-23)”, press release (December 2023).

- ITU Publication, World Radiocommunications Conference 2023 (WRC-23), Provisional Final Acts, (December 2023). Note 1: The International Telecommunication Union (ITU) divides the world into three regions for the management of radio frequency spectrum and satellite orbits: Region 1: includes Europe, Africa, the Middle East west of the Persian Gulf including Iraq, the former Soviet Union, and Mongolia, Region 2: covers the Americas, Greenland, and some of the eastern Pacific Islands, and Region 3: encompasses Asia (excl. the former Soviet Union), Australia, the southwest Pacific, and the Indian Ocean’s islands.

- Geoff Huston, “Starlink Protocol Performance” (November 2023). Note 2: The recommendations, such as those designated with “ADD” (additional), are typically firm in the sense that they have been agreed upon by the conference participants. However, they are subject to ratification processes in individual countries. The national regulatory authorities in each member state need to implement these recommendations in accordance with their own legal and regulatory frameworks.

- Curtis Arnold, “An overview of how Starlink’s Phased Array Antenna “Dishy McFlatface” works.”, LinkedIn (August 2023).

- Quora, “How much does a satellite cost for SpaceX’s Starlink project and what would be the cheapest way to launch it into space?” (June 2023).

- The Clarus Network Group, “Starlink v OneWeb – A Comprehensive Comparison” (October 2023).

- Brian Wang, “SpaceX Launches Starlink Direct to Phone Satellites”, (January 2024).

- Sergei Pekhterev, “The Bandwidth Of The StarLink Constellation…and the assessment of its potential subscriber base in the USA.”, SatMagazine, (November 2021).

- I. del Portillo et al., “A technical comparison of three low earth orbit satellite constellation systems to provide global broadband,” Acta Astronautica, (2019).

- Nils Pachler et al., “An Updated Comparison of Four Low Earth Orbit Satellite Constellation Systems to Provide Global Broadband” (2021).

- Shkelzen Cakaj, “The Parameters Comparison of the “Starlink” LEO Satellites Constellation for Different Orbital Shells” (May 2021).

- Mike Puchol, “Modeling Starlink capacity” (October 2022).

- Mike Dano, “T-Mobile and SpaceX want to connect regular phones to satellites”, Light Reading (August 2022).

- Starlink, “SpaceX sends first text message via its newly launched direct to cell satellites” (January 2024).

- GSMA.com, “New Speedtest Data Shows Starlink Performance is Mixed — But That’s a Good Thing” (2023).

- Starlink, “Starlink specifications” (Starlink.com page).

- AST SpaceMobile website: https://ast-science.com/ Constellation Areas: Internet, Direct-to-Cell, Space-Based Cellular Broadband, Satellite-to-Cellphone. 243 LEO satellites planned. 2 launched.

- Lynk Global website: https://lynk.world/ (see also FCC Order and Authorization). It should be noted that Lynk can operate within 617 to 960 MHz (Space-to-Earth) and 663 to 915 MHz (Earth-to-Space). However, only outside the USA. Constellation Area: IoT / M2M, Satellite-to-Cellphone, Internet, Direct-to-Cell. 8 LEO satellites out of 10 planned.

- Omnispace website: https://omnispace.com/ Constellation Area: IoT / M2M, 5G. World’s first global 5G non-terrestrial network. Initial support for the 3GPP-defined Narrow-Band IoT radio interface. Planned 200 LEO and <15 MEO satellites. So far, only 2 satellites have been launched.

- NewSpace Index: https://www.newspace.im/ I find that this resource has excellent and up-to-date information on commercial satellite constellations.

- Wikipedia, “Satellite constellation”.

- LEOLABS Space visualization – SpaceX Starlink mapping. (deselect “Debris”, “Beams”, and “Instruments”, and select “Follow Earth”). An alternative visualization service for Starlink & OneWeb satellites is the website Satellitemap.space (you might go to settings and turn on signal Intensity which will give you the satellite coverage hexagons).

- European Union Aviation Safety Agency (EASA). Note that an EASA Type Certificate is a critical document in the world of aviation. This certificate is a seal of approval, indicating that a particular type of aircraft, engine, or aviation component meets all the established safety and environmental standards per EASA’s stringent regulations. When an aircraft, engine, or component is awarded an EASA Type Certificate, it signifies that it has undergone a thorough and rigorous evaluation process. This process assesses everything from design and manufacturing to performance and safety aspects. The issuance of the certificate confirms that the product is safe for use in civil aviation and complies with the necessary airworthiness requirements. These requirements are essential to ensure the safety and reliability of aircraft operating in civil airspace. An EASA Type Certificate is also highly regarded beyond the borders of the European Union. Many countries recognize or accept these certificates, which facilitates international trade in aviation products and contributes to the global standardization of aviation safety.

ACKNOWLEDGEMENT.

I gratefully acknowledge my wife, Eva Varadi, for her support, patience, and understanding during the creative process of writing this article.

I also owe a lot of gratitude to James Ratzer, Partner at New Street Research, for editorial suggestions, great discussions, and challenges that made the paper far better than it otherwise would have been. I would also like to thank Russel Waller, Pan European Telecoms and ESG Equity Analyst at New Street Research, for being supportive and for insisting that I get something written for NSR.

I also greatly appreciate my past collaboration and the many discussions on the topic of Stratospheric Drones in particular and advanced antenna designs and properties in general that I have had with Dr. Jaroslav Holis, Senior R&D Manager (Group Technology, Deutsche Telekom AG) over the last couple of years. When it comes to my early involvement in Stratospheric Drones activities with Group Technology Deutsche Telekom AG, I have to recognize my friend, mentor, and former boss, Dr. Bruno Jacobfeuerborn, former CTO Deutsche Telekom AG and Telekom Deutschland, for his passion and strong support for this activity since 2015. My friend and former colleague Rachid El Hattachi deserves the credit for “discovering” and believing in the opportunities that a cellular broadband-based stratospheric drone brings to the telecom industry.

Many thanks to Dr. Reiner Kickert, CEO of Leichtwerk AG, for providing some high-resolution pictures of his beautiful StratoStreamer.

Thanks to my friend Amit Keren for suggesting the great quote that starts this article.

Any errors or unclarities are solely my own and not those of the collaborators and colleagues who have done their best to support this piece.